Credit: Dreamstime

Apache OpenWhisk

OpenWhisk is an open source serverless functions platform for building cloud applications. OpenWhisk offers a rich programming model for creating serverless APIs from functions, composing functions into serverless workflows, and connecting events to functions using rules and triggers.

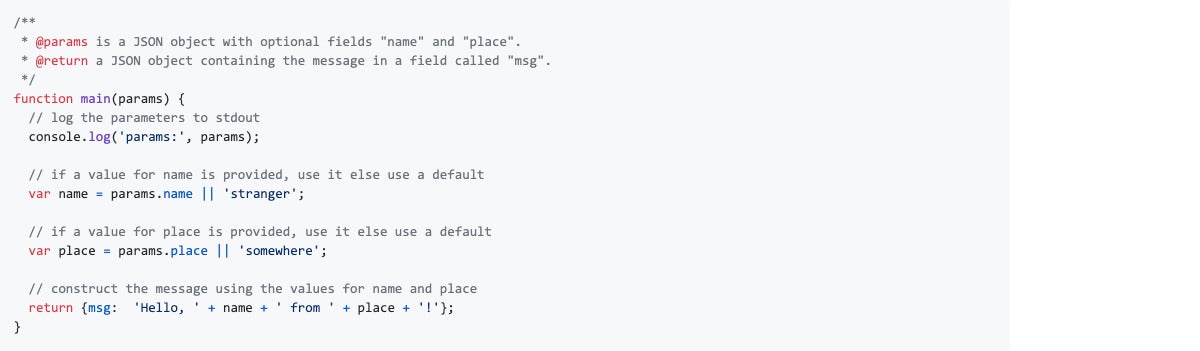

You can run an OpenWhisk stack locally, deploy it to a Kubernetes cluster (either your own or a managed cluster from a public cloud provider), or use a cloud provider that fully supports OpenWhisk, such as IBM Cloud. OpenWhisk currently supports code written in Ballerina, Go, Java, JavaScript (see screenshot below), PHP, Python, Ruby, Rust, Swift, and .NET Core; you can also supply your own Docker container.

The OpenWhisk project includes a number of developer tools. These include the wsk command line interface to easily create, run, and manage OpenWhisk entities; wskdeploy to help deploy and manage all your OpenWhisk Packages, Actions, Triggers, Rules, and APIs from a single command using an application manifest; the OpenWhisk REST API; and OpenWhisk API clients in JavaScript and Go.

IDG
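As a rough illustration of the OpenWhisk programming model, here is a minimal Python action. It assumes the Python runtime's convention that the entry point is a function named `main`, which receives the invocation parameters as a dict and returns a JSON-serializable dict. The action name `hello` and the `name` parameter are hypothetical. With the wsk CLI, a file like this would typically be deployed with `wsk action create hello hello.py` and run with `wsk action invoke hello --result --param name World`.

```python
# hello.py: minimal OpenWhisk-style Python action (illustrative sketch).
# Assumption: the runtime calls main() with the invocation parameters as a dict
# and serializes the returned dict to JSON.


def main(args):
    # "name" is a hypothetical parameter used only for this example.
    name = args.get("name", "stranger")
    return {"greeting": f"Hello, {name}!"}


if __name__ == "__main__":
    # Local smoke test; on OpenWhisk the platform invokes main() itself.
    print(main({"name": "World"}))
```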

Fission

Fission is an open source serverless framework for Kubernetes with a focus on developer productivity and high performance. Fission operates on just the code: Docker and Kubernetes are abstracted away under normal operation, though you can use both to extend Fission if you want to.

Fission is extensible to any language. The core is written in Go, and language-specific parts are isolated in something called environments. Fission currently supports functions in Node.js, Python, Ruby, Go, PHP, and Bash, as well as any Linux executable.
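To show how little code a Fission function needs, here is a sketch for the Python environment. It assumes that environment's convention of calling a module-level `main()` with no arguments and returning the result as the HTTP response body. The file and function names are illustrative; with the Fission CLI, deployment would look roughly like `fission env create --name python --image fission/python-env` followed by `fission function create --name hello --env python --code hello.py`.

```python
# hello.py: minimal function for Fission's Python environment (sketch).
# Assumption: the environment's loader imports this file and calls main()
# with no arguments, sending the return value back as the response body.


def main():
    return "Hello, world!\n"


if __name__ == "__main__":
    # Local check only; inside Fission the environment calls main().
    print(main(), end="")
```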

Fission maintains a pool of “warm” containers that each contain a small dynamic loader. When a function is first called (that is, “cold-started”), a running container is taken from the pool and the function is loaded into it. This pool is what makes Fission fast: cold-start latencies are typically about 100 milliseconds.
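The warm-pool idea can be modeled in a few lines. The following is a toy sketch, not Fission's implementation: `WarmPool`, `invoke`, and the loader callables are hypothetical names, and details such as per-environment pools, pool replenishment, and idle eviction are left out. The point it illustrates is that a cold start only loads code into a container that is already running, while later calls reuse that specialized container.

```python
from collections import deque


class WarmPool:
    """Toy model of a pool of pre-started, generic containers (hypothetical)."""

    def __init__(self, size):
        # Generic containers that are running but have no function loaded yet.
        self.idle = deque(f"container-{i}" for i in range(size))
        # Function name -> (container, loaded function).
        self.specialized = {}
        self.cold_starts = 0

    def invoke(self, fn_name, load_code, *args):
        if fn_name not in self.specialized:
            if not self.idle:
                raise RuntimeError("no warm containers left")
            container = self.idle.popleft()
            # Cold start: only the function code is loaded, which is cheap
            # compared with booting a new container from scratch.
            self.specialized[fn_name] = (container, load_code())
            self.cold_starts += 1
        _, fn = self.specialized[fn_name]
        return fn(*args)


def load_double():
    # Stands in for the dynamic loader fetching and importing user code.
    return lambda x: x * 2


if __name__ == "__main__":
    pool = WarmPool(size=3)
    print(pool.invoke("double", load_double, 21))  # cold start
    print(pool.invoke("double", load_double, 5))   # reuses the same container
    print(pool.cold_starts, len(pool.idle))
```

Only the first call for a given function pays the (small) loading cost; the second call above goes straight to the already specialized container.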

Knative

Knative, created by Google with contributions from more than 50 different companies, delivers an essential set of components to build and run serverless applications on Kubernetes. Knative components focus on solving mundane but difficult tasks such as deploying a container, routing and managing traffic with blue/green deployment, scaling automatically and sizing workloads based on demand, and binding running services to eventing ecosystems. The Google Cloud Run service is built on Knative.
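Sizing workloads based on demand ultimately comes down to arithmetic on observed load. The sketch below is a simplified model of concurrency-based autoscaling. It assumes the desired replica count is the observed number of concurrent requests divided by a per-replica concurrency target, rounded up, and then clamped to configured minimum and maximum scales. Knative's actual autoscaler adds averaging windows, a panic mode for sudden bursts, and utilization targets, none of which are modeled here; the function name and parameters are hypothetical.

```python
import math


def desired_replicas(observed_concurrency, target_per_replica,
                     min_scale=0, max_scale=None):
    """Simplified concurrency-based autoscaling rule (illustrative only)."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    # Enough replicas that none has to exceed its concurrency target.
    replicas = math.ceil(observed_concurrency / target_per_replica)
    # min_scale=0 lets an idle service scale all the way down to zero.
    replicas = max(replicas, min_scale)
    if max_scale is not None:
        replicas = min(replicas, max_scale)
    return replicas


if __name__ == "__main__":
    print(desired_replicas(250, 100))               # 3 replicas
    print(desired_replicas(0, 100))                 # 0: scaled to zero
    print(desired_replicas(250, 100, max_scale=2))  # 2: capped
```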

Kubeless

Kubeless is an open source, Kubernetes-native serverless framework designed to be deployed on top of a Kubernetes cluster and to take advantage of Kubernetes primitives. Kubeless reproduces much of the functionality of AWS Lambda, Microsoft Azure Functions, and Google Cloud Functions. You can write Kubeless functions in Python, Node.js, Ruby, PHP, Golang, .NET, and Ballerina. Kubeless event triggers can be based on HTTP events or on the Kafka messaging system.
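Kubeless Python functions follow a handler convention similar to Lambda's: a function that receives an `event` and a `context`. The sketch below assumes the request payload arrives under `event["data"]`, as in the Kubeless examples; the file name, the function name `hello`, and the `name` field are hypothetical. Deployment would look something like `kubeless function deploy hello --runtime python3.7 --from-file handler.py --handler handler.hello`, with the runtime version depending on what your Kubeless installation provides.

```python
# handler.py: Kubeless-style Python function (sketch).
# Assumption: Kubeless passes an event dict whose "data" key holds the
# request payload, plus a context dict describing the function.


def hello(event, context):
    data = event.get("data")
    # Accept either a JSON object with a "name" field or a plain string body.
    if isinstance(data, dict):
        name = data.get("name", "stranger")
    elif data:
        name = str(data)
    else:
        name = "stranger"
    return f"Hello, {name}!"


if __name__ == "__main__":
    # Simulate an invocation locally with a hand-built event.
    print(hello({"data": {"name": "Kubernetes"}}, {}))
```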

Kubeless uses a Kubernetes Custom Resource Definition so that functions can be created as custom Kubernetes resources. It then runs an in-cluster controller that watches these custom resources and launches runtimes on demand. The controller dynamically injects your functions’ code into the runtimes and makes them available over HTTP or via a publish/subscribe mechanism.
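The watch-and-react loop described here is the standard Kubernetes controller pattern. Stripped of the Kubernetes API calls, its core is a reconciliation step that compares the declared functions with what is actually running. The sketch below models only that comparison: `FunctionSpec` and `reconcile` are hypothetical names, and a real controller would receive changes through Kubernetes watch events and then create or update objects such as Deployments and Services rather than returning a list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionSpec:
    """Hypothetical stand-in for a Function custom resource's spec."""
    runtime: str
    handler: str
    code: str


def reconcile(desired, running):
    """Return the (action, name) pairs needed to make running match desired."""
    actions = []
    for name, spec in desired.items():
        if name not in running:
            actions.append(("launch", name))
        elif running[name] != spec:
            # Code or config changed: re-inject the new code into a runtime.
            actions.append(("update", name))
    for name in running:
        if name not in desired:
            # The custom resource was deleted: tear down its runtime.
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    v1 = FunctionSpec("python3.7", "handler.hello", "return 'v1'")
    v2 = FunctionSpec("python3.7", "handler.hello", "return 'v2'")
    desired = {"hello": v2, "report": v1}
    running = {"hello": v1, "stale": v1}
    print(reconcile(desired, running))
```

Because the controller always works from the declared state, re-running `reconcile` after the actions have been applied yields an empty list, which is what makes the loop safe to repeat on every watch event.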

OpenFaaS

OpenFaaS is an open source serverless framework for Kubernetes with the tag line “Serverless functions made simple.” OpenFaaS is part of the “PLONK” stack of cloud native technologies: Prometheus (monitoring system and time series database), Linkerd (service mesh), OpenFaaS, NATS (secure messaging and streaming), and Kubernetes. You can use OpenFaaS to deploy event-driven functions and microservices to Kubernetes using its Template Store or a Dockerfile.
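OpenFaaS templates scaffold the handler for you. Assuming the classic `python3` template from the Template Store, in which the template reads the request body, passes it to `handle(req)` as a string, and returns whatever the function returns as the response, a small JSON function might look like the sketch below. The `name` field is hypothetical; `faas-cli new --lang python3 hello` would generate a scaffold of this shape, and `faas-cli up` would build, push, and deploy it.

```python
# handler.py: function body in the style of OpenFaaS's classic python3 template.
# Assumption: the template passes the request body to handle() as a string
# and sends the returned string back as the response body.
import json


def handle(req):
    try:
        payload = json.loads(req) if req else {}
    except json.JSONDecodeError:
        return json.dumps({"error": "expected a JSON body"})
    if not isinstance(payload, dict):
        return json.dumps({"error": "expected a JSON object"})
    name = payload.get("name", "stranger")
    return json.dumps({"greeting": f"Hello, {name}!"})


if __name__ == "__main__":
    print(handle('{"name": "OpenFaaS"}'))
```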

How to choose a cloud serverless platform

With all those options, how can you choose just one? As with almost all questions of software architecture, it depends.

To begin with, you should evaluate your existing software estate and your goals. An organisation that is starting with legacy applications written in Cobol and running on in-house mainframes has a very different path to travel from one that already has an extensive cloud software estate.

For organisations with a cloud estate, it’s worth making a list of your deployments, the clouds they use, and the availability zones they run in. It’s also worth understanding where your customers and users are located, and the usage patterns for your services.

For example, an application that is used 24/7 at a consistent load level isn’t likely to be a good candidate for serverless deployment: A properly sized server, VM, or cluster of containers might be cheaper and easier to manage. On the other hand, an application that is used at irregular intervals and at widely varying scales, and that is triggered by an important action (such as a source code check-in), might be a perfect candidate for a serverless architecture.
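The cost side of this trade-off can be made concrete with back-of-the-envelope arithmetic. The sketch below assumes a simple pay-per-use price model (a charge per GB-second of compute plus a charge per million requests) and compares it with a flat monthly cost for an always-on server. All prices in the example are made-up round numbers for illustration, not any provider's actual rates, and free tiers, data transfer, and operations effort are ignored.

```python
def serverless_monthly_cost(invocations, avg_duration_s, memory_gb,
                            price_per_gb_s, price_per_million_requests):
    """Pay-per-use cost: compute (GB-seconds) plus per-request charges."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_s
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def break_even_invocations(server_monthly_cost, avg_duration_s, memory_gb,
                           price_per_gb_s, price_per_million_requests):
    """Monthly invocations at which pay-per-use costs as much as the server."""
    per_call = serverless_monthly_cost(1, avg_duration_s, memory_gb,
                                       price_per_gb_s, price_per_million_requests)
    return server_monthly_cost / per_call


if __name__ == "__main__":
    # Hypothetical prices: $0.00002 per GB-s, $0.20 per million requests,
    # and a $50/month always-on VM.
    params = dict(avg_duration_s=0.2, memory_gb=0.5,
                  price_per_gb_s=0.00002, price_per_million_requests=0.20)
    print(f"${serverless_monthly_cost(1_000_000, **params):.2f} for 1M calls")
    print(f"break-even: about {break_even_invocations(50, **params):,.0f} calls/month")
```

With these illustrative numbers, a million 200 ms invocations a month cost about $2.20, and pay-per-use only catches up with the $50 server at roughly 22.7 million invocations a month. A sustained workload well above that volume favours the always-on server, while an irregular workload well below it favours serverless.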

A service with low latency requirements that is used all over the world might be a good candidate for deployment at many availability zones or edge points of presence. A service used only in Washington, DC, could be deployed in a single availability zone in Virginia.

A company that has experience with Kubernetes might want to consider one of the open source serverless platforms that deploy to Kubernetes. An organisation lacking Kubernetes experience might be better off deploying to native cloud FaaS infrastructure, using either an open source framework such as the Serverless Framework or a proprietary service such as AWS Lambda, Google Cloud Functions, or Azure Functions.

If the serverless application you’re building depends on a cloud database or streaming service, you should consider deploying the application on the same cloud to minimise intra-application latencies. That doesn’t constrain your choice of framework too much. For example, an application that has a Google Cloud Bigtable data store can have its serverless component in Google Cloud Functions, Google Cloud Run, Serverless Framework, OpenWhisk, Kubeless, OpenFaaS, Fission, or Knative, and, as long as that component runs on Google Cloud, still likely achieve minimal latency.

In many cases your application will be the same as or similar to common use cases. It’s worth checking the examples and libraries for the serverless platforms you’re considering to see whether there’s a reference architecture that will do the job for you. It’s not that most functions as a service require writing a lot of code: Rather, reusing code for FaaS lets you take advantage of well-tested, proven architectures and avoid a lot of debugging.