Review: Microsoft Azure AI and Machine Learning aims for the enterprise

- 21 January, 2021 15:50

Microsoft has a presence in most enterprise development and IT shops, so it’s not a surprise that the Azure AI and Machine Learning platform has a presence in most enterprise development, data analysis, and data science shops. Enterprise AI often has demanding requirements, and the Azure offerings do their best to meet them.

Azure AI and Machine Learning includes 17 cognitive services, a machine learning platform pitched at three different skill levels, cognitive search, bot services, and Azure Databricks, an Apache Spark product optimized for the Azure platform and integrated with other Azure services.

Rather than force companies to run all Azure services on the Azure platform, Microsoft also offers several Docker containers that allow companies to use AI on premises. These support a subset of Azure Cognitive Services, and allow companies to run the cognitive services within their firewall and near on-prem data, which is a sine qua non for companies with tight data security policies and companies subject to restrictive data privacy regulations.

Responsible AI was in the news recently, although not in a good way, when Google fired Timnit Gebru. Earlier in 2020, the Responsible AI news was more positive, as various companies introduced tools to promote more responsible machine learning. Microsoft, for example, added interpretability features to its Azure Machine Learning product, and also released three Responsible AI projects as open source: Fairlearn, InterpretML, and SmartNoise.

Fairlearn contains mitigation algorithms as well as a Jupyter widget for model assessment, and has been integrated into a Fairness panel in Azure Machine Learning. InterpretML helps you understand your model’s global behavior, or understand the reasons behind individual predictions, and has been integrated into an Explanation dashboard in Azure Machine Learning. The SmartNoise project, in collaboration with OpenDP, aims to make differential privacy broadly accessible to future deployments by providing several basic building blocks that can be used by people involved with sensitive data. You can bring SmartNoise into a Python notebook by installing and importing the project, and adding a few calls to fuzz your sensitive data.
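To make the idea of fuzzing sensitive data concrete, here is a minimal sketch of the Laplace mechanism, one of the standard building blocks of differential privacy. It does not use the SmartNoise API; the function names, the sample ages, and the clamping bounds are all assumptions chosen for illustration, and the sketch treats the record count as public.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a zero-centered Laplace distribution.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace(0, scale) distribution.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng=None):
    """Return a noisy estimate of the mean of `values`.

    Each value is clamped to [lower, upper], so replacing one record can
    move the mean by at most (upper - lower) / n. The noise scale is that
    sensitivity divided by the privacy budget epsilon.
    """
    rng = rng or random.Random()
    clamped = [min(max(v, lower), upper) for v in values]
    n = len(clamped)
    sensitivity = (upper - lower) / n
    true_mean = sum(clamped) / n
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical sensitive column: the ages of five people.
ages = [23, 35, 41, 58, 62]
noisy = private_mean(ages, lower=0, upper=100, epsilon=1.0, rng=random.Random(0))
print(f"noisy mean age: {noisy:.1f}")
```

A smaller epsilon means more noise and stronger privacy; a larger one means answers closer to the true mean. A production library also has to track the cumulative privacy budget across queries, which this sketch ignores.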

There is a plethora of frameworks and tools in use in the machine learning, deep learning, and AI world. While Azure AI supports dozens of these directly, there are hundreds more, which Azure handles by providing or allowing integration. Some, such as MLflow, integrate as Python packages; others, such as Pachyderm, integrate as containers, often on Kubernetes (AKS).

IDG

As shown in this Azure screenshot, the Azure AI and Machine Learning product includes cognitive services, machine learning, cognitive search, bot service, and Databricks.

Azure Cognitive Services

Microsoft describes Azure Cognitive Services as “a comprehensive family of AI services and cognitive APIs to help you build intelligent apps,” and claims to have the “most comprehensive portfolio of domain-specific AI capabilities on the market,” although its competitors might disagree with that assessment. Azure Cognitive Services are aimed at developers who want to incorporate machine learning into their applications.

The services cover four areas: decision support, language, speech, and vision. Web search used to be included under Cognitive Services, but it has moved to another area, and I won’t cover it.

In general, Azure Cognitive Services don’t need to be trained, at least at the level you’d expect from Azure Machine Learning. Some Azure Cognitive Services do allow customization, but you don’t need to understand machine learning in order to accomplish that. Almost all Azure Cognitive Services have a free trial tier.

Decision support

The decision support area of Azure Cognitive Services includes an anomaly detector service, a content moderator, a metrics advisor, and a personalizer.

Anomaly Detector

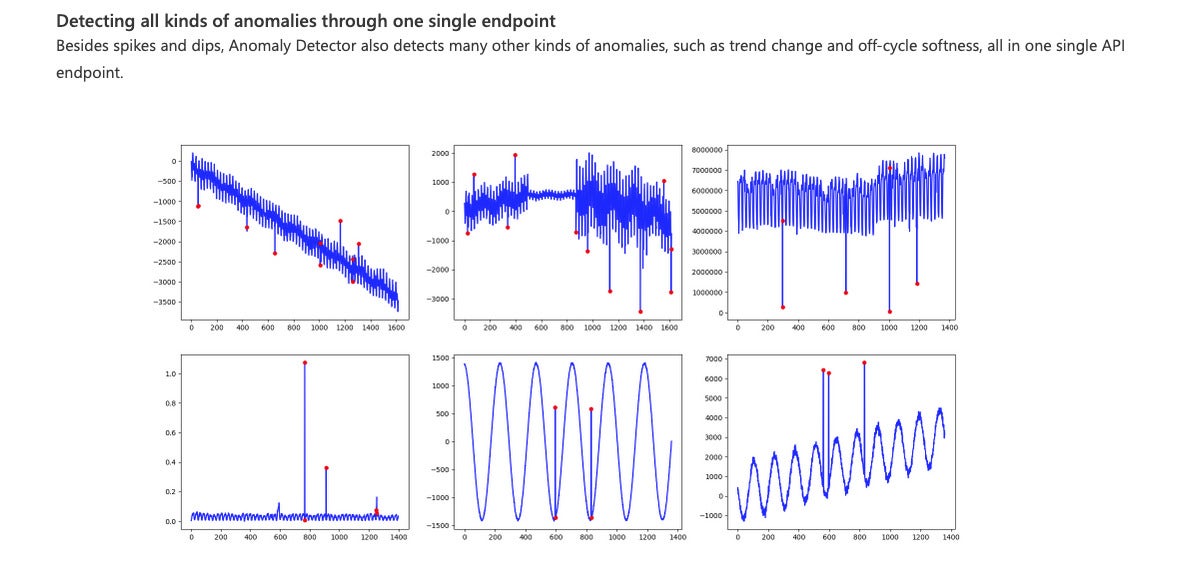

With the Anomaly Detector service, you can embed anomaly detection capabilities into your apps so that users can quickly identify problems as soon as they occur. No experience with machine learning is required. Through an API, Anomaly Detector ingests time series data of all types and selects the best-fitting anomaly detection model for your data to ensure high accuracy. You can customize the service to your business’s risk profile by adjusting one parameter. You can run Anomaly Detector anywhere from the cloud to the intelligent edge.

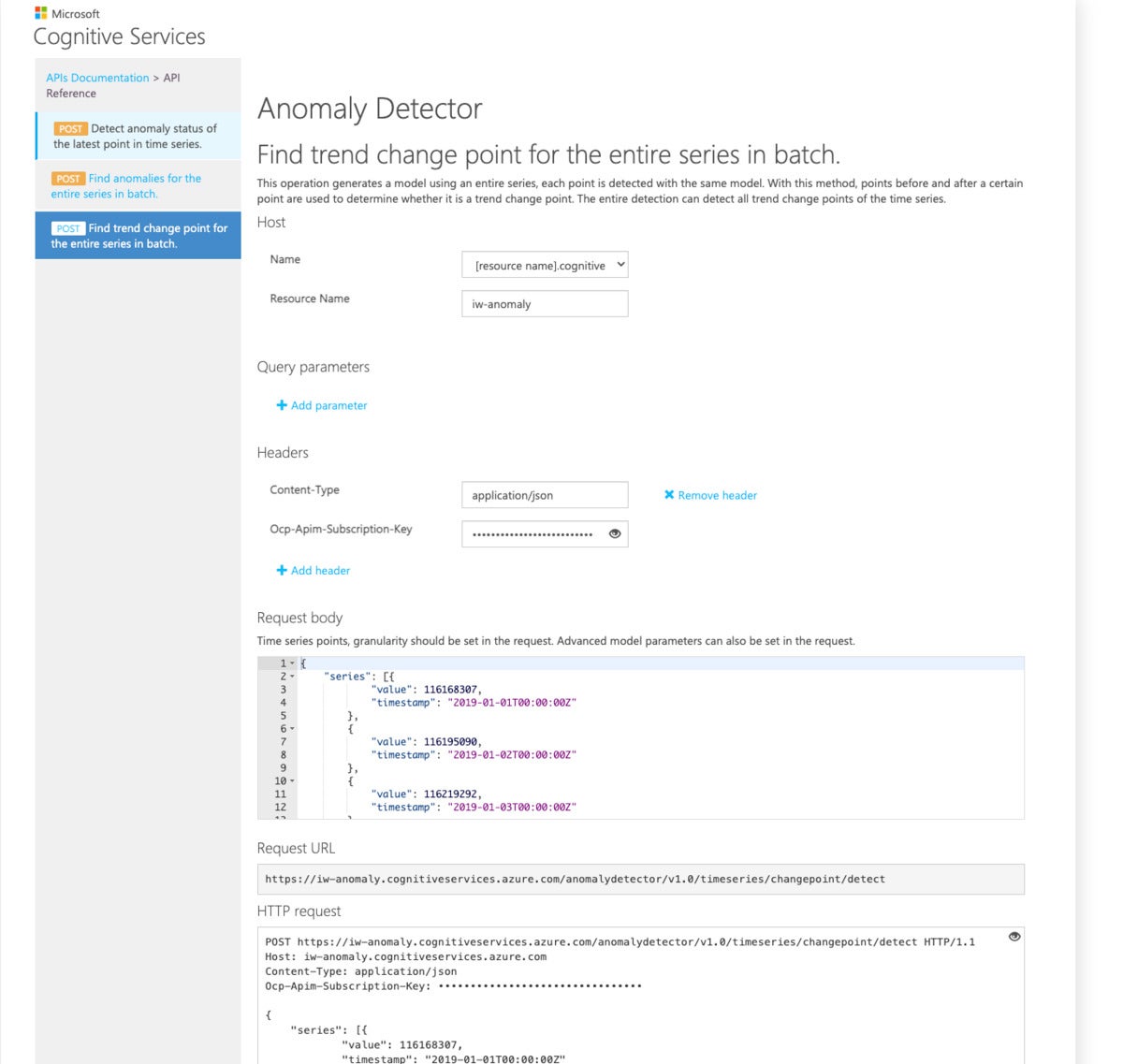

There are three endpoints to an Anomaly Detector service: Detect the anomaly status of the latest point in the time series; find anomalies for the entire series in batch; and find trend change points for the entire series in batch. Under the covers, there are six algorithms for anomaly detection, used in three ensembles depending on the granularity and seasonality of the data; the service performs the selection automatically. The only parameter a user needs to adjust is the sensitivity.

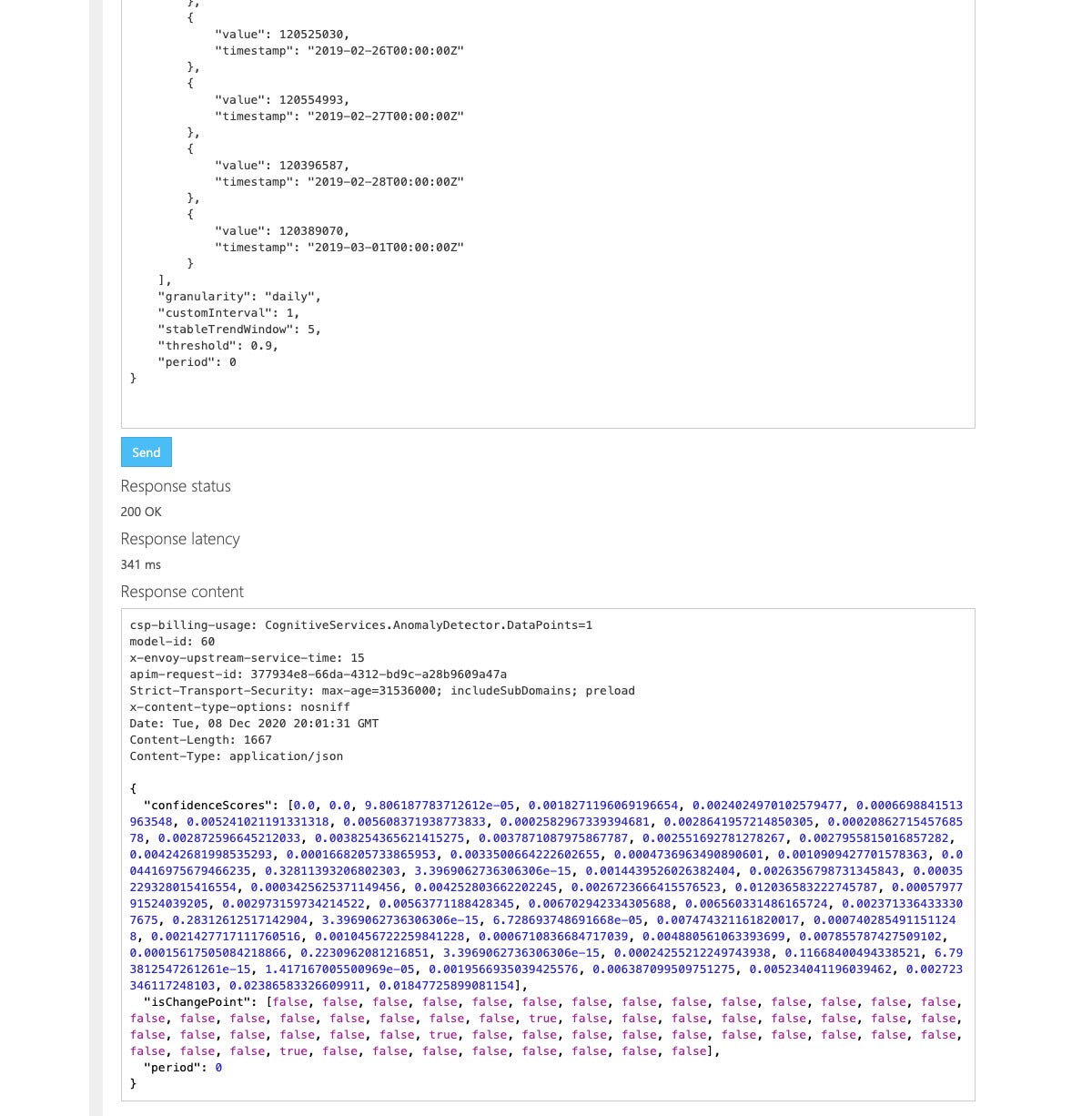

I tested all three endpoints in the Azure console using sample data against a free Anomaly Detector service that I created. For each test I had to fill in the service name (iw-anomaly) and subscription key. The most interesting of the three was the trend change points, shown below. The third screenshot below shows various kinds of anomalies that the service can detect.

In addition to console-based service testing, Azure supplies code samples using curl, C#, Java, JavaScript, Objective-C, PHP, Python, and Ruby. There are also quick starts in C#, Python, and Node.js.
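As a rough illustration of what those samples do, the sketch below builds (but does not send) a request for each of the three endpoints using only the Python standard library. The URL paths, the host name pattern, and the body field names reflect my understanding of the v1.0 REST API and should be checked against the API reference; the key is a placeholder and the data values are made up.

```python
import json
import urllib.request
from datetime import datetime, timedelta, timezone

# Assumed values: substitute your own resource endpoint and key.
ENDPOINT = "https://iw-anomaly.cognitiveservices.azure.com"
SUBSCRIPTION_KEY = "<your-subscription-key>"

# Paths as I understand the v1.0 REST API; verify before relying on them.
PATHS = {
    "last": "/anomalydetector/v1.0/timeseries/last/detect",
    "entire": "/anomalydetector/v1.0/timeseries/entire/detect",
    "changepoint": "/anomalydetector/v1.0/timeseries/changepoint/detect",
}


def make_series(values, start):
    """Turn a list of numbers into daily timestamp/value points."""
    return [
        {
            "timestamp": (start + timedelta(days=i)).strftime("%Y-%m-%dT%H:%M:%SZ"),
            "value": value,
        }
        for i, value in enumerate(values)
    ]


def build_request(kind, series, granularity="daily", sensitivity=None):
    """Build a POST request for one of the three endpoints."""
    body = {"series": series, "granularity": granularity}
    if sensitivity is not None:
        # Sensitivity is the tuning knob for the two anomaly endpoints.
        body["sensitivity"] = sensitivity
    return urllib.request.Request(
        ENDPOINT + PATHS[kind],
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": SUBSCRIPTION_KEY,
        },
        method="POST",
    )


# Made-up daily metric with one obvious spike.
values = [10, 11, 10, 12, 11, 10, 11, 48, 11, 10, 12, 11, 10, 11]
series = make_series(values, datetime(2021, 1, 1, tzinfo=timezone.utc))
req = build_request("entire", series, sensitivity=95)
print(req.get_method(), req.full_url)
# To actually call the service: urllib.request.urlopen(req)
```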

An anomaly detection request in the Azure console to find trend change points. This is a test dataset to be submitted to my free service instance.

An anomaly detection service response to the previous request. I count three change points detected.

Six examples of anomalies that can be detected by the Azure Anomaly Detector service.

Content Moderator

The Content Moderator service, designed to help administrate social media, product review sites, and games with user-generated content, performs image moderation, text moderation, video moderation, and optional human review for predictions with low confidence or mitigating context.

The image moderation service scans images for adult or racy content, detects text in images using optical character recognition (OCR), and detects faces.

There are two text moderation APIs, one standard and one for customization. The standard API returns information about:

- Profanity: Term-based matching with built-in list of profane terms in various languages.

- Classification: Machine-assisted classification into three categories, ranging from sexually explicit to potentially offensive in some circumstances. This service can also recommend when human review should be performed.

- Personal data: Email address, SSN, IP address, phone, and mailing address.

- Auto-corrected text: Correction of frequent typos.

- Original text: Uncorrected text with typos.

- Language: An optional parameter that defaults to English.

The custom term list management API allows you to create and manage up to five lists of up to 10K terms each to augment the standard terms lists used by the content moderator text API.

The video moderation service scans videos for adult or racy content and returns time markers for said content. It also returns a flag saying whether it recommends a human review at each detected event.

Microsoft recommends the Content Moderator review tool website for working with the Content Moderator service, to provide a single interface for image, text, and video moderation reviews.

Metrics Advisor

The Metrics Advisor service builds on the Anomaly Detector service to monitor your organization’s growth engines, from sales revenue to manufacturing operations, in near-real time. It also adapts models to your scenario, offers granular analysis with diagnostics, and alerts you to anomalous events.

Personalizer

Personalizer is an AI service that delivers a personalized, relevant experience for every user. It uses reinforcement learning to optimize its model for your goals, and has an “apprentice” mode that only lets Personalizer interact with users after the service reaches a specified level of confidence in matching the performance of your existing solution.
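The rank-then-reward loop behind this kind of service can be sketched with a toy epsilon-greedy contextual bandit. This is an illustration of the general reinforcement learning idea only, not Personalizer's actual algorithm or SDK; the class, the "morning" context, the two actions, and the click probabilities are all hypothetical.

```python
import random
from collections import defaultdict


class ToyPersonalizer:
    """Epsilon-greedy contextual bandit, for illustration only."""

    def __init__(self, epsilon=0.2, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.totals = defaultdict(float)  # (context, action) -> summed reward
        self.counts = defaultdict(int)    # (context, action) -> rewards seen
        self.pending = {}                 # event_id -> (context, action)
        self.next_id = 0

    def estimate(self, context, action):
        """Average reward observed so far for this context/action pair."""
        n = self.counts[(context, action)]
        return self.totals[(context, action)] / n if n else 0.0

    def rank(self, context, actions):
        """Explore with probability epsilon, otherwise pick the best so far."""
        if self.rng.random() < self.epsilon:
            choice = self.rng.choice(actions)
        else:
            choice = max(actions, key=lambda a: self.estimate(context, a))
        event_id = self.next_id
        self.next_id += 1
        self.pending[event_id] = (context, choice)
        return event_id, choice

    def reward(self, event_id, value):
        """Report how well the ranked action worked out."""
        key = self.pending.pop(event_id)
        self.totals[key] += value
        self.counts[key] += 1


# Simulated user who clicks news far more often than sports in the morning.
click_prob = {"news": 0.8, "sports": 0.2}
model = ToyPersonalizer(epsilon=0.2, seed=42)
env = random.Random(7)
for _ in range(500):
    event_id, shown = model.rank("morning", ["sports", "news"])
    clicked = env.random() < click_prob[shown]
    model.reward(event_id, 1.0 if clicked else 0.0)

for action in ("news", "sports"):
    print(action, round(model.estimate("morning", action), 2))
```

The reward value is where the ethical questions discussed next come in: whatever you choose to reward is what the model will learn to maximize.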

Personalization can be an ethical minefield. Microsoft has guidelines for using Personalizer ethically, which cover the choice of use cases, building reward functions, and choosing features for personalization. As far as I can tell, neither Microsoft nor anyone else actually enforces those guidelines, although there are regulations about privacy (among other areas) that you could easily violate if you chose to ignore the guidelines and implement scenarios that could cause the user harm, such as personalizing offers on loan, financial, and insurance products, where risk factors are based on data the individuals don’t know about, can’t obtain, or can’t dispute.

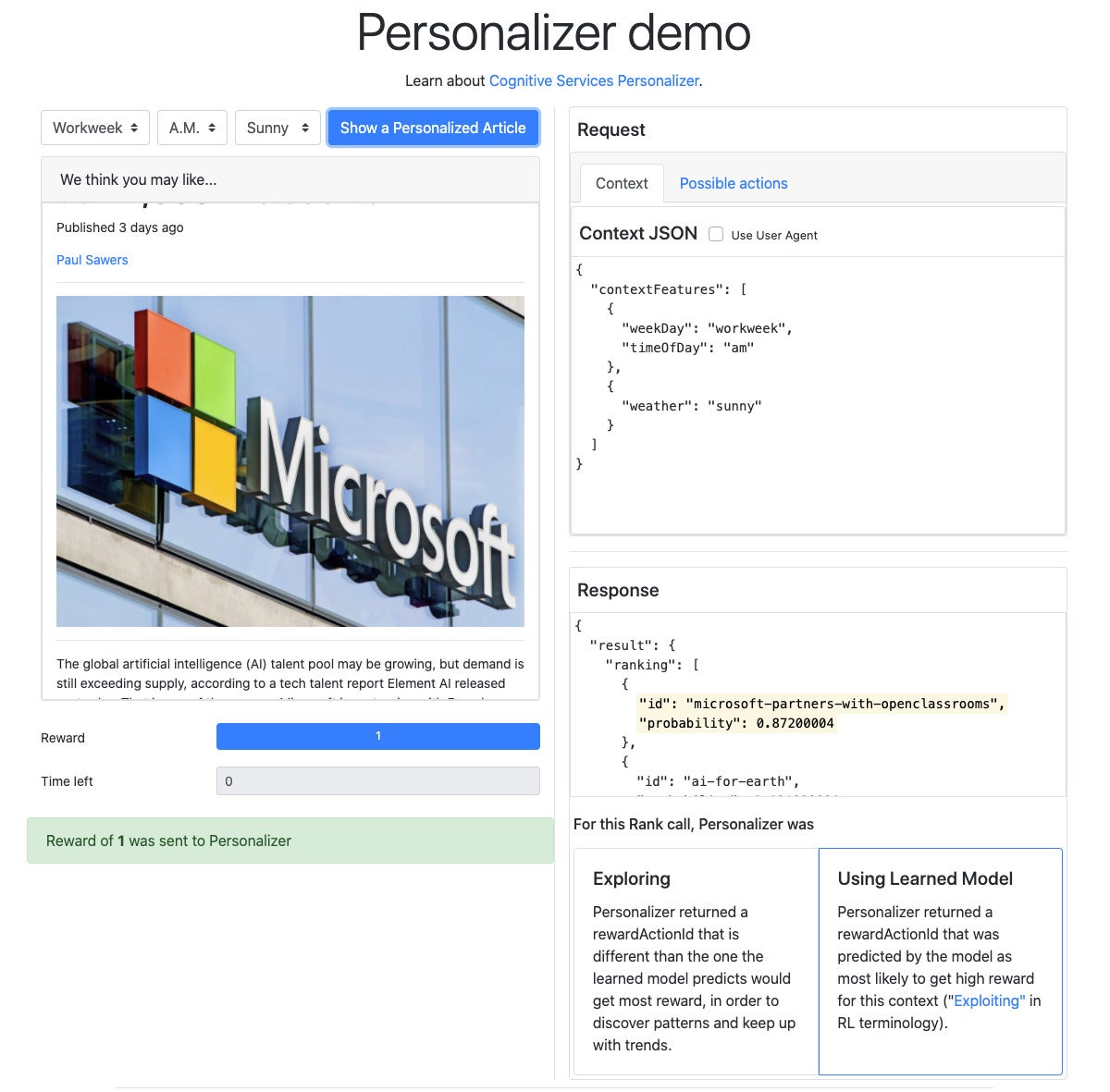

The quickest way to understand the Personalizer service is probably to run the interactive Personalizer demo website, shown below.

In this interactive Personalizer demo, the user can set several parameters and scroll through the article presented to generate a ranking for that article. Personalizer uses a reinforcement learning (RL) model to generate rankings, and it can update the model continuously.

Language

The language area of Azure Cognitive Services includes an immersive reader, a language understanding service, a conversational question and answer layer for your data, text analytics, and a language translator.

Immersive Reader

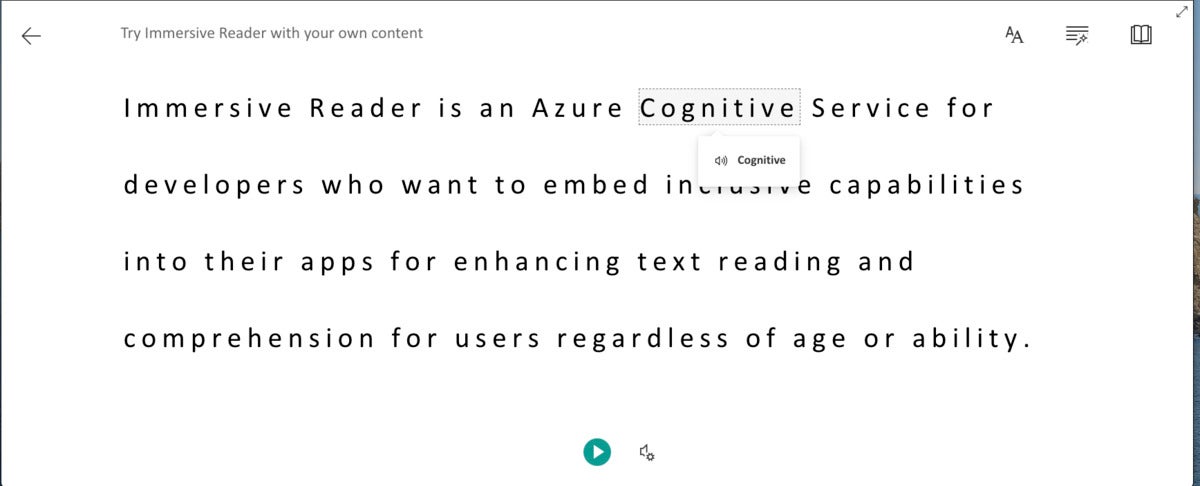

One consequence of the 2020 pandemic has been the need to attend school remotely, which doesn’t work well for all students. Immersive Reader lets you embed text reading and comprehension aids—i.e., audio and visual cues—into applications and websites with one line of code. You can help users of any age and reading ability with features like reading aloud, translating languages, and focusing attention through highlighting and other design elements.

Azure is the only major cloud provider offering this type of reading technology. Immersive Reader supports users with varying abilities and differences—including dyslexia, ADHD, autism, and cerebral palsy—as well as emerging readers and non-native speakers.

Immersive Reader helps readers at all levels read and comprehend text. Here we have selected the word “cognitive” to hear it pronounced. The “play” icon at the bottom of the screen speaks the entire text.

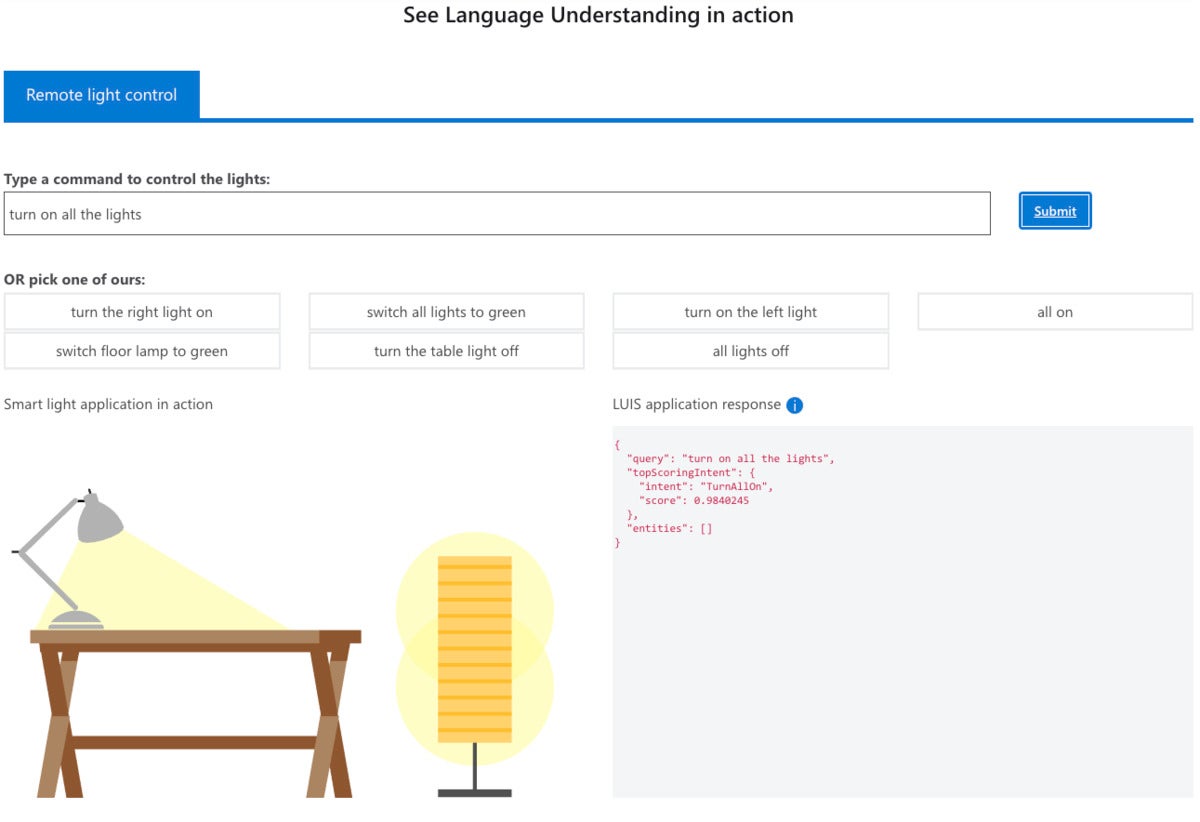

Language Understanding

The Azure Language Understanding service, also called LUIS (the “I” stands for intelligent), allows you to define intents and entities and map them to words and phrases, then use the language model in your own applications. You can use prebuilt domain language models and also build and use customized language models. You can build the model with the authoring APIs, or with the LUIS portal, or both. The process of training LUIS models uses machine teaching, which is simpler than conventional machine learning training, and a LUIS model also improves continuously as the model is used. LUIS supports both text and speech input.
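On the application side, using the model mostly comes down to reading the top intent and the entities out of a JSON prediction. The sketch below parses a hand-written response shaped like what I understand the v3 prediction endpoint to return; the intent name, entity name, scores, and the confidence threshold are hypothetical.

```python
import json

# Hand-written sample in the assumed v3 prediction shape; not real output.
SAMPLE_RESPONSE = """
{
  "query": "make the picture brighter",
  "prediction": {
    "topIntent": "AdjustBrightness",
    "intents": {
      "AdjustBrightness": {"score": 0.93},
      "None": {"score": 0.04}
    },
    "entities": {"direction": ["brighter"]}
  }
}
"""


def route(response_text, min_score=0.5):
    """Return (intent, entities), falling back to "None" on low confidence."""
    prediction = json.loads(response_text)["prediction"]
    intent = prediction["topIntent"]
    score = prediction["intents"][intent]["score"]
    if score < min_score:
        return "None", {}
    return intent, prediction.get("entities", {})


intent, entities = route(SAMPLE_RESPONSE)
print(intent, entities)
```

Keeping a threshold like `min_score` in your own code lets the application ask the user to rephrase instead of acting on a weak match.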

The Language Understanding service, also called LUIS, allows you to use prebuilt domain language models and to build and use customized language models. Here we are using a limited vocabulary of intents and entities to control a picture in a web demo.

QnA Maker

QnA Maker lets you create a conversational question-and-answer layer over your existing data. You can use it to build a knowledge base by extracting questions and answers from your semi-structured content, including FAQs, manuals, and documents. You can answer users’ questions with the best answers from the QnAs in your knowledge base automatically. In addition, your knowledge base gets smarter as it continually learns from user behavior.

In addition to web Q&A, you can create and publish a bot in Teams, Skype, or elsewhere. You can also make your bot more conversational by adding a pre-populated chit-chat dataset at a range of (in)formality levels from professional to enthusiastic.

QnA Maker is often implemented behind a Language Understanding service. QnA Maker itself requires an App Service and a Cognitive Search service.

Text Analytics

Text Analytics is an AI service that uncovers insights such as sentiment, entities, relations, and key phrases in unstructured text. You can use it to identify key phrases and entities such as people, places, and organizations, to understand common topics and trends. A related service, Text Analytics for Health (currently in preview), allows you to classify medical terminology using domain-specific, pretrained models. You can gain a deeper understanding of customer opinions with sentiment analysis, and evaluate text in a wide range of languages.

Microsoft offers extensive transparency notes for its Text Analytics services, which ties into Microsoft’s responsible AI principles. It also promises privacy, in that it doesn’t use the training performed on your text to improve Text Analytics models. If you choose to run Text Analytics in containers, you can control where Cognitive Services processes your data.

The alternative to running Text Analytics in containers is making synchronous calls to the service’s REST API, or using the client library SDK. In November 2020 Azure added a new preview Analyze operation for users to analyze larger documents asynchronously, combining multiple Text Analytics features in one call.

You can understand Text Analytics using this web demo, which is unfortunately not interactive. There are Text Analytics Quickstarts you can run in C#, Python, JavaScript, Java, Ruby, and Go.

Translator

Bing Translator once ran a distant second to Google Translate for quality and speed of translation, as well as in numbers of supported language pairs. That’s no longer the case for quality and speed, although as of December 2020 Google Translate supports 109 languages, compared to Microsoft’s “more than 70.” (Both translate Klingon, at least in text and using the API, should you happen to care.)

Azure’s Translator service not only provides stock translations, but also allows you to build custom models for domain-specific terminology, although using customized translation models is a little more expensive than using stock models. Microsoft doesn’t share the custom training performed on your training material or use it to improve Translator quality, nor does it log your API text input during translation, even though it does use consumer feedback from Bing Translate to improve stock Translator quality.
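For reference, a stock or custom translation request can be sketched as follows with the standard library. The endpoint, query parameters, and header names reflect my understanding of the Translator v3 REST API; the key, region, and the custom `category` ID are placeholders, and the request is built but not sent.

```python
import json
import urllib.parse
import urllib.request

# Global Translator endpoint as I understand it; regional ones also exist.
TRANSLATOR_ENDPOINT = "https://api.cognitive.microsofttranslator.com"


def build_translate_request(texts, to_langs, key, region,
                            from_lang=None, category=None):
    """Build a POST request for the v3 translate operation."""
    params = [("api-version", "3.0")]
    if from_lang:
        params.append(("from", from_lang))
    params.extend(("to", lang) for lang in to_langs)
    if category:
        # A custom model is selected by its category ID (placeholder here).
        params.append(("category", category))
    url = f"{TRANSLATOR_ENDPOINT}/translate?{urllib.parse.urlencode(params)}"
    body = [{"Text": text} for text in texts]
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json; charset=UTF-8",
            "Ocp-Apim-Subscription-Key": key,
            "Ocp-Apim-Subscription-Region": region,
        },
        method="POST",
    )


req = build_translate_request(
    ["すみません"], ["en"], key="<your-key>", region="<your-region>", from_lang="ja"
)
print(req.full_url)
# To actually call the service: urllib.request.urlopen(req)
```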

Translator is the same service that powers translations in all of Microsoft’s products, such as Word, PowerPoint, Teams, Edge, Visual Studio, and Bing, not to mention the Microsoft Translator app. In addition to being available as a cloud service, Translator can be downloaded to run locally on edge devices for some languages.

I created a free-tier Translator service without any problems. I didn’t learn much more from testing it programmatically, however, than I did from exercising Bing Translator on the web or the Microsoft Translator app on Android. Bing Translator and Microsoft Translator also support speech and vision for some languages.

Bing Translator, shown here, and the Microsoft Translator mobile app both use the Azure Translator service, although the app may run the edge version of Translator on the mobile device. Here I’ve spoken “sumimasen,” a rather flexible Japanese apology, which the site correctly transcribed into Hiragana and translated as “Excuse me” as well as “I am sorry” and “Pardon me.”

Speech

The Speech area of Azure Cognitive Services includes speech recognition, text to speech, speech translation, and speaker recognition.

The Speech SDK supports C#, C++, Java, JavaScript, Objective-C, Python, and Swift for both speech recognition and speech generation, and Go for recognition only. It exposes many features from the Speech service, but not all of them. The Speech SDK also supports the Speech Translation API, which can translate speech input to a different language with a single call.

Speech Studio is a customization portal for Speech. It claims to supply all the tools you need to transcribe spoken audio to text, perform translations, and convert text to lifelike speech.

Speech to Text

Microsoft describes its Speech to Text service as allowing you to quickly and accurately transcribe audio to text in more than 85 languages and variants. The latter include six variants of English, seven variants of Arabic, and two variants each of French, Portuguese, and Spanish. You can also customize speech recognition models to enhance accuracy for domain-specific terminology, and combine transcriptions with language understanding or search services.

A test of Azure Speech to Text in US English. The system had no trouble recognizing my dictation or adding correct sentence capitalization and punctuation.

Text to Speech

Azure’s Text to Speech service allows you to build apps and services that speak naturally, choosing from more than 200 voices and over 50 languages and variants. Some of the 50 languages and variants don’t have corresponding speech recognition support.

You can differentiate your brand with a customized voice, and access voices with different speaking styles and emotional tones to fit your use case. Standard voices don’t sound as natural as neural voices, but neural voices cost four times as much to use. Azure neural voices compete with Google WaveNet voices.
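Voices and speaking styles are typically selected through SSML markup sent to the service. The sketch below assembles such a document in Python; the voice name, the style value, and the `mstts` namespace are what I believe the service accepts, so treat them as assumptions and check the current voice list before using them.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def build_ssml(text, voice="en-US-AriaNeural", style=None, lang="en-US"):
    """Return an SSML document asking for a given voice and optional style."""
    inner = escape(text)  # protect &, < and > in the spoken text
    if style:
        # Speaking styles apply to neural voices only (assumed style name).
        inner = f'<mstts:express-as style="{style}">{inner}</mstts:express-as>'
    return (
        '<speak version="1.0" '
        'xmlns="http://www.w3.org/2001/10/synthesis" '
        'xmlns:mstts="https://www.w3.org/2001/mstts" '
        f'xml:lang="{lang}">'
        f'<voice name="{voice}">{inner}</voice>'
        '</speak>'
    )


ssml = build_ssml("Thanks for calling. How can I help?", style="customerservice")
ET.fromstring(ssml)  # raises ParseError if the markup is not well formed
print(ssml)
```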

This is the test panel for Azure Text to Speech. It supports all the available languages and variants (50 total) and all the available voices for each variant (200 total counting all variants), both standard and neural (high quality). Neural voices support several speaking styles in addition to general, such as newscast and customer service.

Speech Translation

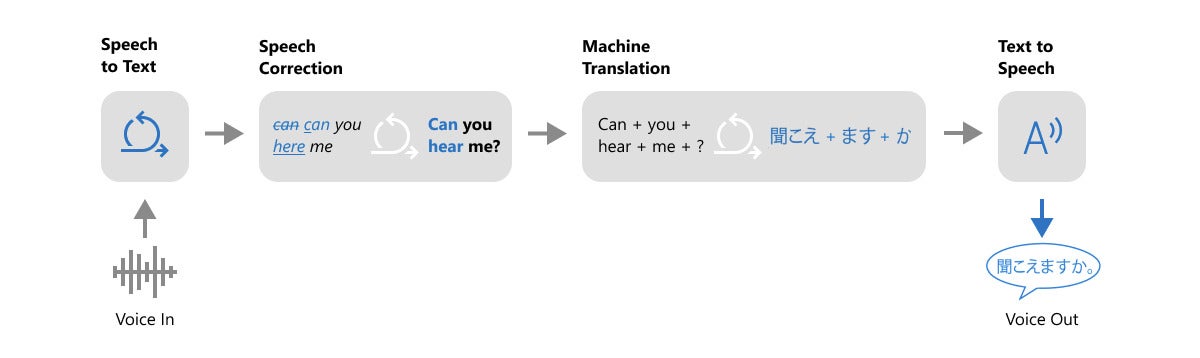

The Speech Translation service allows you to translate audio from more than 30 languages and customize your translations for your organization’s specific terms. The service essentially combines speech to text for the source language, language translation, and text to speech for the target language.

Azure Speech Translation works in stages. First, it recognizes speech, corrects it, and adds punctuation. Then it translates the text. Finally, it speaks the translated text, for languages with speech support.

Speaker Recognition

The Speaker Recognition service, currently in preview, works in two use cases. For identification, it matches the voice of an enrolled speaker from within a group, which is useful in transcribing conversations. For verification, it can either use pass phrases or free-form voice input to verify individuals for secure customer engagements.

Vision

This area includes computer vision, custom vision, face detection, form recognition, and video indexing.

Computer Vision

The Computer Vision service includes a bunch of capabilities for analyzing still images and video. These include optical character recognition (OCR), digital asset management (DAM), tagging visual features, object detection (with bounding boxes), commercial brand detection, categorizing images with a taxonomy, description generation, face detection, image type detection, domain-specific content detection, color scheme detection, thumbnail generation, area of interest detection (with bounding boxes), and adult content detection.

Spatial analysis analyzes video for events such as people detection (with bounding boxes), people tracking (from frame to frame), and region of interest (for example, person crossing a line or entering a zone). Spatial analysis is in a gated preview that requires an application.

The Azure Computer Vision service identifies objects and their bounding boxes and reads text from images and video.

Custom Vision

Transfer learning is a quick way to customize an image model. Custom Vision uses transfer learning to create custom image models from just a few tagged images—not the thousands of images you might expect to need. It can also help you with untagged images. As you add more images, the model keeps improving.

Custom Vision can train on a small number of tagged, or even untagged, images. Adding more images improves the model’s accuracy.

Face

The Face service includes face detection that perceives faces and attributes in an image; person identification that matches an individual in your private repository of up to 1 million people; perceived emotion recognition that detects a range of facial expressions like happiness, contempt, neutrality, and fear; and recognition and grouping of similar faces in images.

Azure Face Verification returns the probability that two faces match.

Form Recognizer

Form Recognizer applies advanced machine learning to accurately extract text, key/value pairs, and tables from documents. With surprisingly few samples (the examples given used five exemplars for each custom document type), Form Recognizer tailors its understanding to your custom documents, both on-premises and in the cloud. It also has several pre-built models, such as for layouts, invoices, sales receipts, and business cards.

The Form Recognizer service can extract text from documents and forms.

Video Indexer

With the Video Indexer service, you can automatically extract metadata—such as spoken words, written text, faces, speakers, celebrities, emotions, topics, brands, and scenes—from video and audio files. Then you can access the data within your application or infrastructure or make it more discoverable.

Azure Video Indexer automatically extracts metadata from video and audio. Here it has extracted people, topics, and labels from videos of a Microsoft event.

Web search

The Bing Search APIs used to be here, under Cognitive Services. They are now under Bing.

Azure Machine Learning

While Azure Cognitive Services are primarily aimed at software developers, Azure Machine Learning is primarily aimed at data scientists. There’s a lot of overlap, of course: Data scientists may well choose to use Cognitive Services if they already work well for the application at hand or can be customized with transfer learning, and programmers may find that they are comfortable using Jupyter Notebooks to build models for cases where Cognitive Services fall short. Even business analysts without machine learning experience may manage to build models with AutoML or using the Azure Machine Learning drag-and-drop designer.

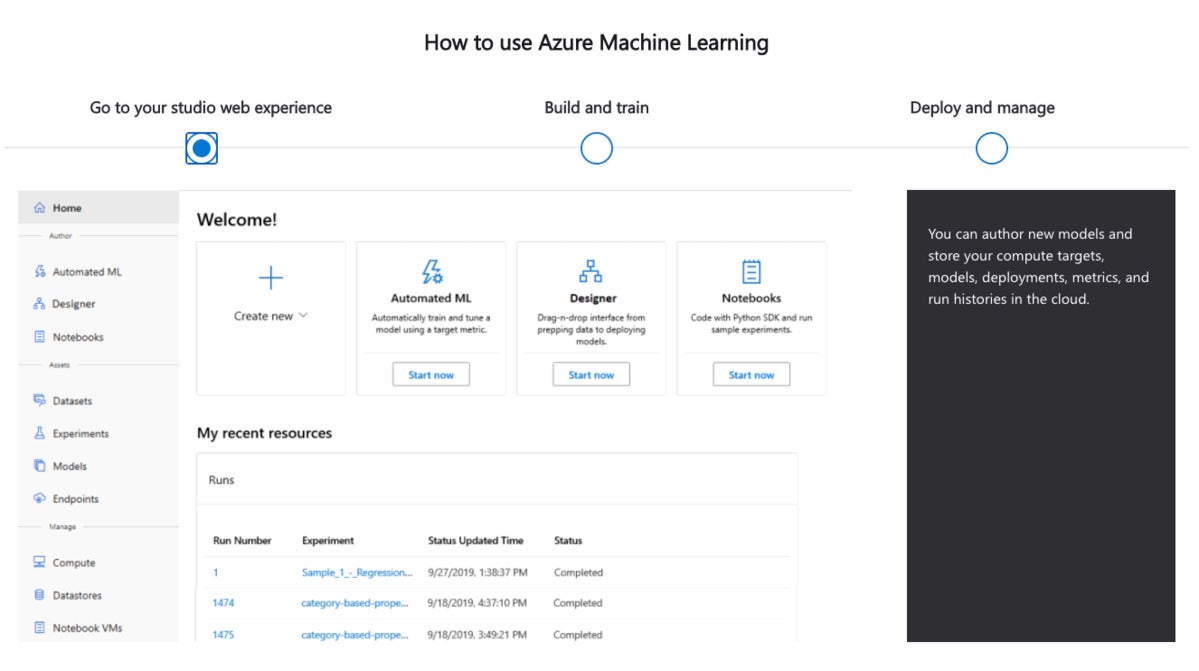

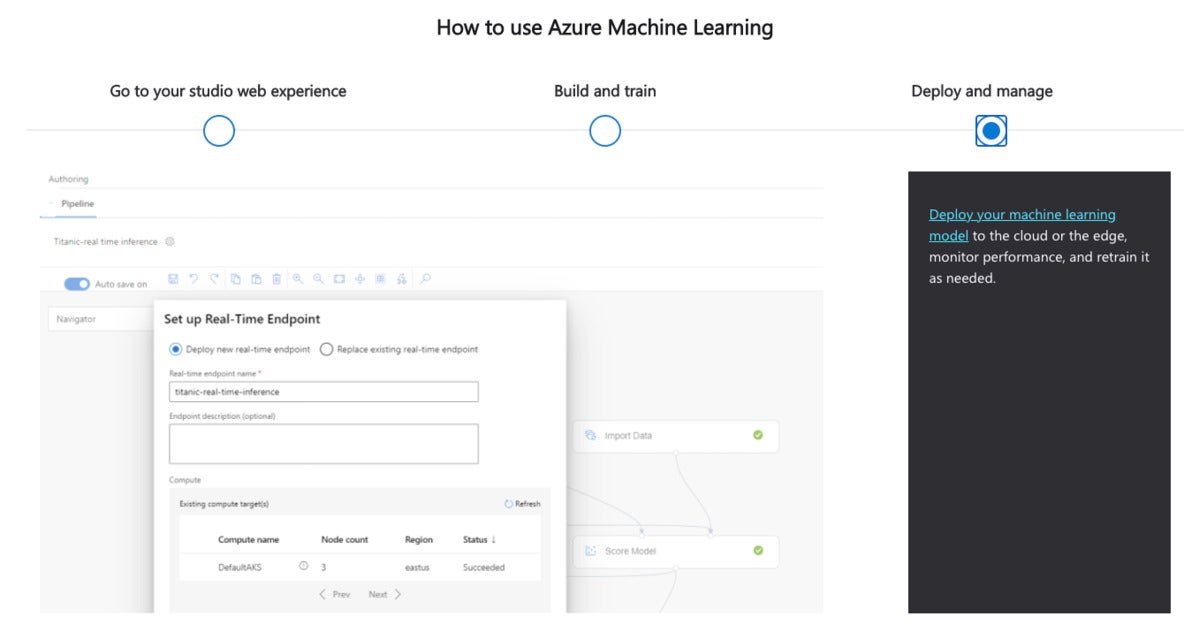

Microsoft maintains that Azure Machine Learning accelerates the end-to-end machine learning lifecycle. That doesn’t mean that you are required to use Azure Machine Learning for everything—just that you can. You can also integrate third-party products and other Azure services with Azure Machine Learning. The first three screenshots below show Microsoft’s visual introduction to Azure Machine Learning, although the screens are slightly out of date. In particular, the first intro screen lacks the Pipelines tab under Assets, shown in the fourth screen below.

The Studio portion of Azure Machine Learning organizes and manages the entire machine learning lifecycle. Note the three choices for model building: AutoML, a GUI designer, and Azure Notebooks.

One of the three choices for model building is AutoML. This screen allows you to manage recent automated machine learning runs.

Once you have an acceptable model, you can deploy it to the Azure cloud or edge. You can monitor it over time and then retrain it when the data drifts.

The current version of Azure Machine Learning includes a Pipelines tab under Assets.

The last time I wrote about Azure Machine Learning, the old drag-and-drop designer had been deprecated, and there was a new emphasis on Python programming and Azure (Jupyter) Notebooks, essentially raising the bar on skills. The designer has now been revamped, and the designer and AutoML are now given equal emphasis with Notebooks. Another improvement is the addition of R support and RStudio Server integration.

Microsoft’s own high-level summary of Azure ML emphasizes “productivity for all skill levels,” end-to-end MLOps, state-of-the-art responsible ML, and “best-in-class support for open-source frameworks and languages including MLflow, Kubeflow, ONNX, PyTorch, TensorFlow, Python, and R.” As key service capabilities, Microsoft mentions collaborative notebooks, automated machine learning, drag and drop machine learning, data labeling, MLOps, autoscaling compute, RStudio integration, deep integration with other Azure services, reinforcement learning, responsible ML, enterprise-grade security, and cost management.

Notebooks

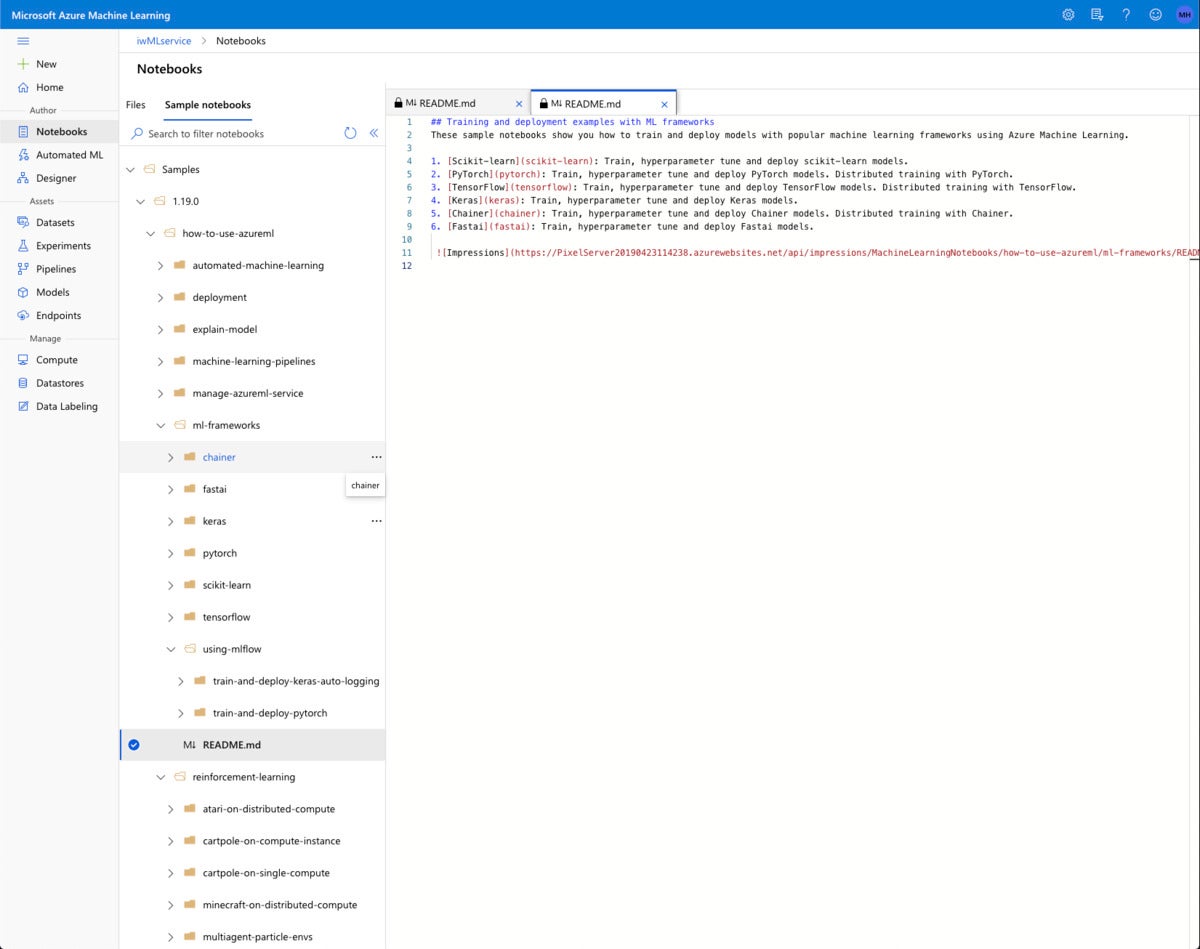

Rather than create a notebook from scratch for the purposes of this review, I started with a Microsoft sample notebook.

There are many more Azure sample notebooks now than when I looked at them in 2019. Among other improvements, they now cover more frameworks and reinforcement learning.

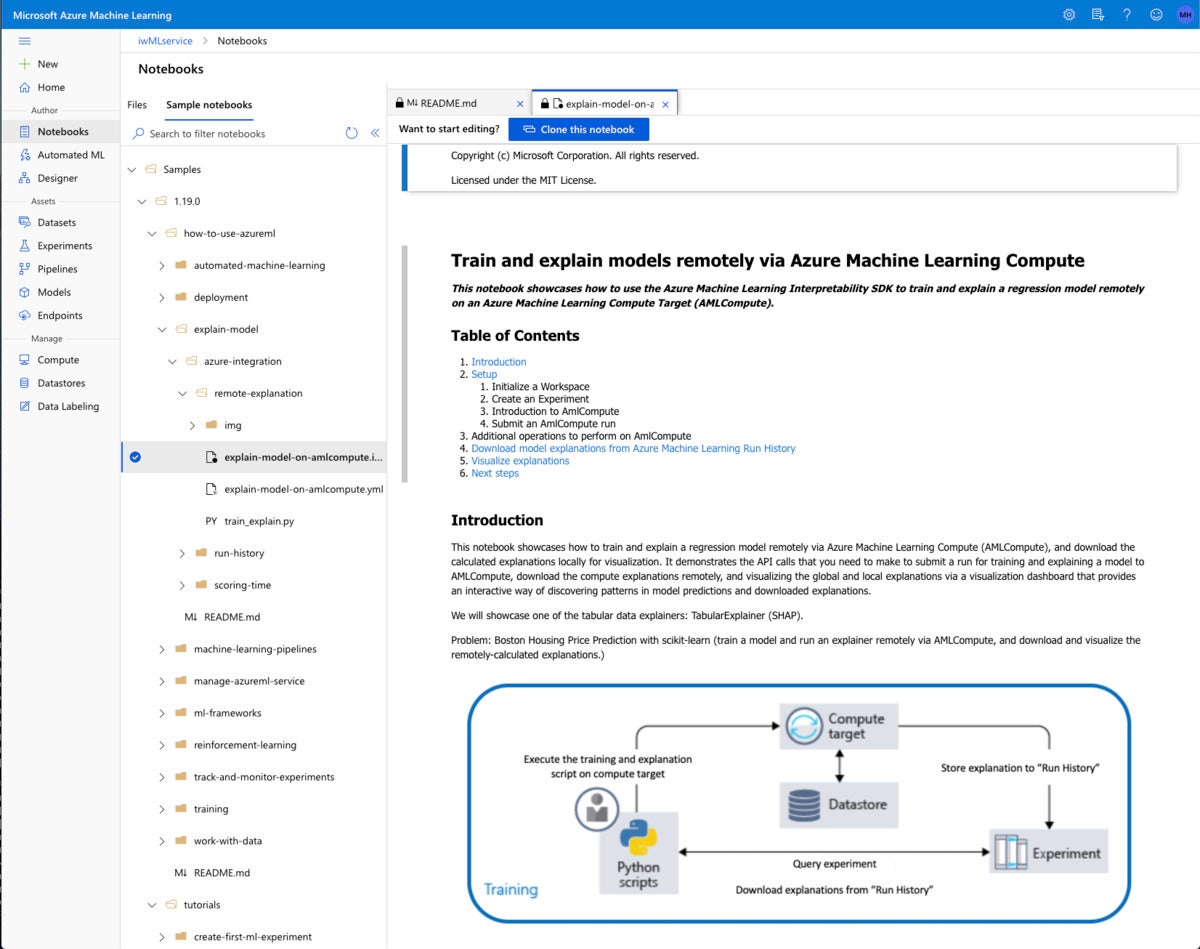

I chose one of the sample notebooks that demonstrate explainable machine learning. This is only one of the new capabilities of Azure Notebooks. Others include forecasting and reinforcement learning.

The remote-explanation sample demonstrates how to train and explain models remotely using the Azure Machine Learning Interpretability SDK.

To actually run a sample notebook, you need to clone it into your own directory and add a compute resource instance.

For the notebook itself, I picked a two-core general purpose VM with 7 GB of RAM, which costs $0.15 per hour to run.

This notebook creates an additional compute resource to run the model, a remote cluster of up to four nodes. Then it submits a script to the cluster, specifying dependencies of Pandas and Scikit-learn, and waits for the script to complete.

This run took 5 minutes 39 seconds. Much of that time was the five minutes spent waiting for the remote resource to spin up, which was annoying—but that’s the price you pay for creating compute resources on demand and releasing them when they are idle.
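To put those numbers in perspective, here is the arithmetic as a short script. It covers only the notebook VM at the $0.15 hourly rate quoted above and assumes billing proportional to elapsed time; the cost of the cluster nodes is not included because their rate was not part of this test.

```python
NOTEBOOK_VM_RATE = 0.15       # USD per hour for the notebook VM
RUN_SECONDS = 5 * 60 + 39     # total run time: 5 minutes 39 seconds
SPIN_UP_SECONDS = 5 * 60      # time spent waiting for the remote cluster


def vm_cost(seconds, hourly_rate):
    """Cost of keeping a VM up for `seconds`, assuming pro-rated billing."""
    return hourly_rate * seconds / 3600


wait_share = SPIN_UP_SECONDS / RUN_SECONDS
print(f"notebook VM cost for the run: ${vm_cost(RUN_SECONDS, NOTEBOOK_VM_RATE):.4f}")
print(f"share of the run spent waiting: {wait_share:.0%}")
# notebook VM cost for the run: $0.0141
# share of the run spent waiting: 88%
```

In other words, the wait costs far more in patience than in money: the notebook VM bill for the whole run is under two cents.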

Finally, the notebook downloads the results from the remote cluster and lets you analyze them with the explanation dashboard. The What-if tool is comparable to Google’s, but not limited to TensorFlow models. This particular example builds a Scikit-learn model.

This is the Explanation Dashboard viewed in a separate browser tab. There are four screens in the dashboard; this is the individual feature importance and what-if screen, showing real datapoints at left and the entry form for what-if datapoints at right.

Azure Machine Learning notebooks currently have pre-release support for R and RStudio. I was able to create an RStudio environment from the Compute tab (see the first screenshot below) and clone the sample vignettes by following the instructions in the tutorial. I ran into trouble at the “load your workspace” stage, but fixed it by reinstalling the Azure Machine Learning SDK for R from GitHub. Unfortunately, I ran into more bugs at the create_estimator() call that I couldn’t fix, and gave up. (See the second screenshot below.) Oh, well: It was advertised as pre-release, and it was.

Individual compute instances are typically used for notebooks. When an instance is running, you can use the appropriate Application URI link to launch a JupyterLab, Jupyter, or RStudio environment. This particular instance was created without SSH privileges.

Azure offers pre-release support for RStudio Server open source. In going through this tutorial, I ran into a bug that appears to be a version mismatch.

Automated ML

Automated ML in Azure is not as new as it looks. As far as I can tell, it’s a nice user interface around the AutoMLConfig method that has been available in Azure Notebooks since at least early 2019. What is new under the covers? Forecasts, explanations, and featurization (automatic feature engineering).

I’ll note that Azure Automated ML currently only works on tabular or file data sets, just like Google AutoML Tables. DataRobot (see my review) is more flexible, and does AutoML using a mix of training data types, including images. While an Azure AutoML task of type image-instance-segmentation was added to the code in December 2020, I haven’t yet found it in the user interface or tutorials.

For fun, you can compare the Bike share AutoML forecasting tutorial with the notebook version of the same analysis. As a crusty old Python programmer, I prefer the notebook, but you may find the graphical interface easier for new AutoML projects.

This Azure notebook implements AutoML forecasting. There is a similar tutorial using the automated machine learning interface.

Designer

The Azure Machine Learning Designer is a no-code/low-code graphical interface for creating pipelines. While it resembles the old Azure Machine Learning Studio interface, it uses the much more robust infrastructure now available in Azure Machine Learning, including clusters of VM-based nodes, which may have GPUs or FPGAs, as compute targets. The Designer’s graphical approach to pipeline creation is similar to KNIME, although KNIME has many more modules.

The Azure Machine Learning Designer allows you to drag and drop modules and other assets onto a canvas to create a machine learning pipeline with little or no code. This pipeline is a restaurant rating recommender provided as a sample. The Wide and Deep model, proposed by Google, jointly trains wide linear models and deep neural networks to combine the strengths of memorization and generalization.

Assets

With Azure Machine Learning datasets, you can keep a single copy of data in your storage referenced by datasets and access data during model training without worrying about connection strings or data paths.

Your machine learning experiments and the runs within each experiment are listed in the Experiments tab.

The Experiments tab lists the Azure Machine Learning experiments you have done and the runs within each experiment. If you include child runs and have done AutoML experiments, you’ll see a lot more runs listed.

Pipelines show the Designer runs you’ve completed in the Pipeline runs tab, and diagrams you haven’t run in the Pipeline drafts tab. Azure Machine Learning pipelines can be published to get a REST endpoint that can be invoked from an external client. Clicking into a Pipeline brings up the diagram as an experiment run.
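Invoking a published pipeline from an external client amounts to an authenticated POST. The sketch below builds such a request with the standard library; the body field names follow my recollection of Microsoft's documentation, while the endpoint URL, token, experiment name, and parameter are placeholders. Obtaining the Azure Active Directory token is out of scope here.

```python
import json
import urllib.request


def build_pipeline_run_request(rest_endpoint, aad_token, experiment_name,
                               parameters=None):
    """Build a POST request that asks a published pipeline to start a run."""
    body = {"ExperimentName": experiment_name}
    if parameters:
        # Optional values for pipeline parameters defined at publish time.
        body["ParameterAssignments"] = parameters
    return urllib.request.Request(
        rest_endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {aad_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder endpoint and token; copy the real endpoint from the portal.
req = build_pipeline_run_request(
    "https://example.invalid/pipelines/placeholder-id",
    aad_token="<token>",
    experiment_name="restaurant-recommender",
    parameters={"sample_rate": "0.5"},
)
print(req.get_method(), req.full_url)
# To actually start a run: urllib.request.urlopen(req)
```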

Experiment runs performed on Designer pipelines display as graphs. The Explanations and Fairness tabs are new.

Completed models are listed in the Models tab whether or not they have been deployed. You can deploy models to create web service endpoints, listed in the Endpoints tab.

Manage

You can manage your compute instances, compute clusters, inference clusters, and attached compute resources in the Compute tab. That’s a good place to check for running instances that you might want to kill before they accrue long-term charges.

Any datastore you register appears in the Datastores tab. The underlying storage may be Azure Blob storage or an Azure file share.

Azure Machine Learning data labeling gives you a central place to create, manage, and monitor labeling projects. Data labeling currently supports image classification, either multi-label or multi-class, and object identification with bounding boxes.

Data labeling tracks progress and maintains the queue of incomplete labeling tasks. This is a manual process with two optional phases of machine learning assistance: clustering and pre-labeling. The latter uses transfer learning, and may be available after only a few hundred images have been manually labeled if your categories are similar to the pre-trained model.

ONNX Runtime

The Open Neural Network Exchange (ONNX) can help optimize the inference of your trained machine learning model. Models from many frameworks, including TensorFlow, PyTorch, Scikit-learn, Keras, Chainer, MXNet, Matlab, and Spark MLlib, can be exported or converted to the standard ONNX format.

The ONNX Runtime is a high-performance inference engine for deploying ONNX models to production. It’s optimized for both cloud and edge and works on Linux, Windows, and Mac. Written in C++, it also has C, Python, C#, Java, and JavaScript (Node.js) APIs for usage in a variety of environments. ONNX Runtime supports both deep neural network and traditional machine learning models and integrates with accelerators on different hardware such as TensorRT on Nvidia GPUs, OpenVINO on Intel processors, and DirectML on Windows.
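To illustrate how the accelerator integration might be used from Python, here is a minimal sketch of picking execution providers in order of preference. The helper choose_providers and the preference order are hypothetical; the provider name strings follow ONNX Runtime's naming as I understand it, and the sketch falls back to CPU-only if the onnxruntime package isn't installed.

```python
# Preference order is an illustrative assumption, not a recommendation.
PREFERRED_PROVIDERS = [
    "TensorrtExecutionProvider",   # TensorRT on Nvidia GPUs
    "OpenVINOExecutionProvider",   # OpenVINO on Intel processors
    "DmlExecutionProvider",        # DirectML on Windows
    "CPUExecutionProvider",        # default fallback
]


def choose_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that are available, in preference order."""
    chosen = [name for name in preferred if name in available]
    if not chosen:
        raise ValueError("none of the preferred execution providers is available")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort
        available = ort.get_available_providers()
    except ImportError:
        # Assume CPU-only when onnxruntime is not installed.
        available = ["CPUExecutionProvider"]
    print(choose_providers(available))
    # With a real model file, the list would be passed along the lines of:
    # ort.InferenceSession("model.onnx", providers=choose_providers(available))
```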

Azure Databricks

Azure Databricks provides hosted, optimized Apache Spark clusters running on Azure. Spark is a big data analytics service that includes its own machine learning libraries. Azure Databricks integrates with Azure Machine Learning, Azure Data Factory, Azure Data Lake Storage, and Azure Synapse Analytics.

Azure Cognitive Search

Azure Cognitive Search is a cloud search service with built-in AI capabilities that enrich all types of information, to identify and explore relevant content at scale. Formerly known as Azure Search, it uses the same integrated Microsoft natural language stack that Bing and Office have used for more than a decade, and AI services across vision, language, and speech. In other words, Microsoft Search uses Cognitive Search technology to offer software as a service for enterprise search within Microsoft products.

Azure Bot Service

Using bots is one way to automate or partially automate customer service and help desks. The Azure Bot Service and the Microsoft Bot Framework SDK help you to create bots and integrate them with Azure Cognitive Services. Once you've created a bot, you can connect it to channels including your website or apps, Microsoft Teams, Skype, Slack, Cortana, and Facebook Messenger.

Bots can be as sophisticated as you want to make them. You can start simple with informational bots, essentially front ends to your FAQ documents. Then you can add capabilities, for example by using the Azure Text Analytics and Language Understanding services to determine customer intent so that the bot can do customer escalations and help desk triage.
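A help desk triage step of that kind could be sketched roughly as follows. The function, route names, intent names, and threshold are all hypothetical, and the shape of the prediction dictionary (a topIntent plus per-intent scores) is an assumption about what a language understanding service returns, not a documented contract.

```python
def triage(prediction, escalation_intents, min_score=0.7):
    """Pick a route for a customer message from an intent prediction.

    `prediction` is assumed to look like:
    {"topIntent": "Name", "intents": {"Name": {"score": 0.93}}}
    """
    top = prediction.get("topIntent")
    score = prediction.get("intents", {}).get(top, {}).get("score", 0.0)
    if top is None or score < min_score:
        return "faq"            # low confidence: fall back to the FAQ front end
    if top in escalation_intents:
        return "human_agent"    # confident and sensitive: escalate
    return "self_service"       # confident and routine: let the bot handle it


if __name__ == "__main__":
    sample = {"topIntent": "CancelAccount",
              "intents": {"CancelAccount": {"score": 0.91}}}
    print(triage(sample, escalation_intents={"CancelAccount"}))
```

Falling back to the FAQ route on low confidence keeps the bot from escalating or acting on a guess.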

AI and machine learning on Azure

Overall, the Azure AI and Machine Learning platform (including the Azure Cognitive Services, Azure Machine Learning, the ONNX Runtime, Azure Databricks, Azure Cognitive Search, and Azure Bot Service) offers a wide range of cognitive services and a solid platform for machine learning that supports automated machine learning, no-code/low-code machine learning, and Python-based notebooks. All of the services are very solid, and almost all services have a free tier for development and test. The only bug I found was in an experimental RStudio notebook, and that appeared to be a simple library version mismatch. (Yes, I reported the bug.)

I was impressed by the ability of Azure custom cognitive services, for example Custom Vision and Form Recognizer, to do transfer learning with single-digit numbers of samples. I was also impressed by the progress the Azure Responsible AI offerings have made, although I suspect that there’s more work to be done in that area.

How does Azure AI compare to Google Cloud AI and Amazon’s AI and machine learning offerings? The quality and scope are similar; you can’t really go wrong with any of them. While I haven’t yet absorbed all of the improvements that AWS announced at re:Invent in December 2020, I can say that one differentiator between Azure and Google cognitive services is that Azure allows you to run cognitive service containers on premises, while Google currently lacks on-prem options.

—

Pricing

[All dollar values in USD unless otherwise stated]

Note: In general, production services have a 99.9% SLA.

Anomaly detection: 20K free transactions/month (10 calls/second dev/test); $0.314/1K transactions/month (80 calls/second production).

Content Moderator: 5K free transactions/month (1 transaction/second dev/test); $1/1K transactions/month (10 transactions/second production) + volume discounts over 1M transactions/month.

Metrics Advisor: No charge during preview.

Personalizer: 50K free transactions/month (10 GB storage); $1/1K transactions/month + volume discounts over 1M transactions/month.

Immersive Reader: No charge during preview.

Language Understanding: 10K free transactions/month (5 TPS dev/test); $1.50/1K transactions/month text, $5.50/1K transactions/month speech (50 TPS production).

QnA Maker: 3 managed documents free each month (dev/test); $10 for unlimited managed documents. QnA also requires an App Service (free to $0.285/hour) and a Cognitive Search service (free to $7.667/hour).

Text Analytics: 5K free transactions/month; standard $1/1K text records with volume discounts; tiers from $74.71/month for 25K transactions to $5K/month for 10M transactions.

Translator: 2M chars free/month; $10 per million chars of standard translation, $40 per million chars of custom translation; standard translation tiers from $2K/month for 250M chars to $45K/month for 10B chars, custom translation tiers from $2K/month for 62.5M chars to $45K/month for 2.5B chars.

Speech to Text: 5 audio hours free per month, 1 concurrent request; $1 per audio hour, 20 concurrent requests.

Text to Speech: 5 million characters free per month standard, 0.5 million characters free per month neural; $4 per 1M characters standard, $16 per 1M characters neural, $100 per 1M characters neural long audio.

Speech Translation: 5 audio hours free per month; $2.50 per audio hour.

Computer Vision: 5K transactions free per month (20/minute); $1 to $1.50 per 1K transactions (10 TPS) with volume discounts.

Custom Vision: 1 hour training, 5K training images, and 10K predictions free/month (2 TPS); $20/compute hour training, $2 per 1K transactions, $0.70 per 1K images storage (10 TPS).

Face: 30K free transactions/month (20 TPM); $1 per 1K transactions with volume discounts (10 TPS).

Form Recognizer: 500 pages free per month; $10 to $50 per 1K pages depending on form type.

Video Indexer: 10 hours of free indexing to website users and 40 hours of free indexing to API users; $0.15/minute for video analysis, $0.04/minute for audio analysis.

Azure Machine Learning: $0.016 to $26.688/hour depending on number of CPUs, GPUs, and FPGAs, and amount of RAM; storage costs additional. VM costs only; no extra charge for machine learning service.

Azure Databricks: Combination of VM and service costs. See pricing details.

Azure Cognitive Search: Free to $3.839/hour, plus $1/1K images with volume discounts for document image extraction.

Azure Bot Service: Standard channels free; Premium channels free for 10K messages/month, S1 $0.50/1K messages.
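For a back-of-the-envelope estimate, the flat per-unit rates above can be multiplied out as in this sketch. It uses three of the listed rates, ignores volume discounts, and assumes the free allotments belong to a separate tier rather than being deducted from paid usage; the function name and usage figures are illustrative only.

```python
from decimal import Decimal

# Flat standard-tier rates taken from the list above (USD).
RATES = {
    "speech_to_text": Decimal("1.00"),       # per audio hour
    "speech_translation": Decimal("2.50"),   # per audio hour
    "anomaly_detection": Decimal("0.314"),   # per 1K transactions
}


def monthly_cost(service, units):
    """Estimate a month's cost: flat rate times units, rounded to cents.

    Assumes no volume discounts and no deduction of free-tier allotments.
    """
    return (RATES[service] * Decimal(str(units))).quantize(Decimal("0.01"))


if __name__ == "__main__":
    # Hypothetical usage: 200 audio hours of speech translation,
    # and 250K anomaly detection transactions (250 units of 1K).
    print(monthly_cost("speech_translation", 200))
    print(monthly_cost("anomaly_detection", 250))
```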

Platform

Azure Cloud.