Credit: Dreamstime

The last time I wrote about Azure Machine Learning, the old drag-and-drop designer had been deprecated, and there was a new emphasis on Python programming and Azure (Jupyter) Notebooks, essentially raising the bar on skills. The designer has now been revamped, and both the designer and AutoML are given equal emphasis with notebooks. Another improvement is the addition of R support and RStudio Server integration.

Microsoft’s own high-level summary of Azure ML emphasizes “productivity for all skill levels,” end-to-end MLOps, state-of-the-art responsible ML, and “best-in-class support for open-source frameworks and languages including MLflow, Kubeflow, ONNX, PyTorch, TensorFlow, Python, and R.” As key service capabilities, Microsoft mentions collaborative notebooks, automated machine learning, drag and drop machine learning, data labeling, MLOps, autoscaling compute, RStudio integration, deep integration with other Azure services, reinforcement learning, responsible ML, enterprise-grade security, and cost management.

Notebooks

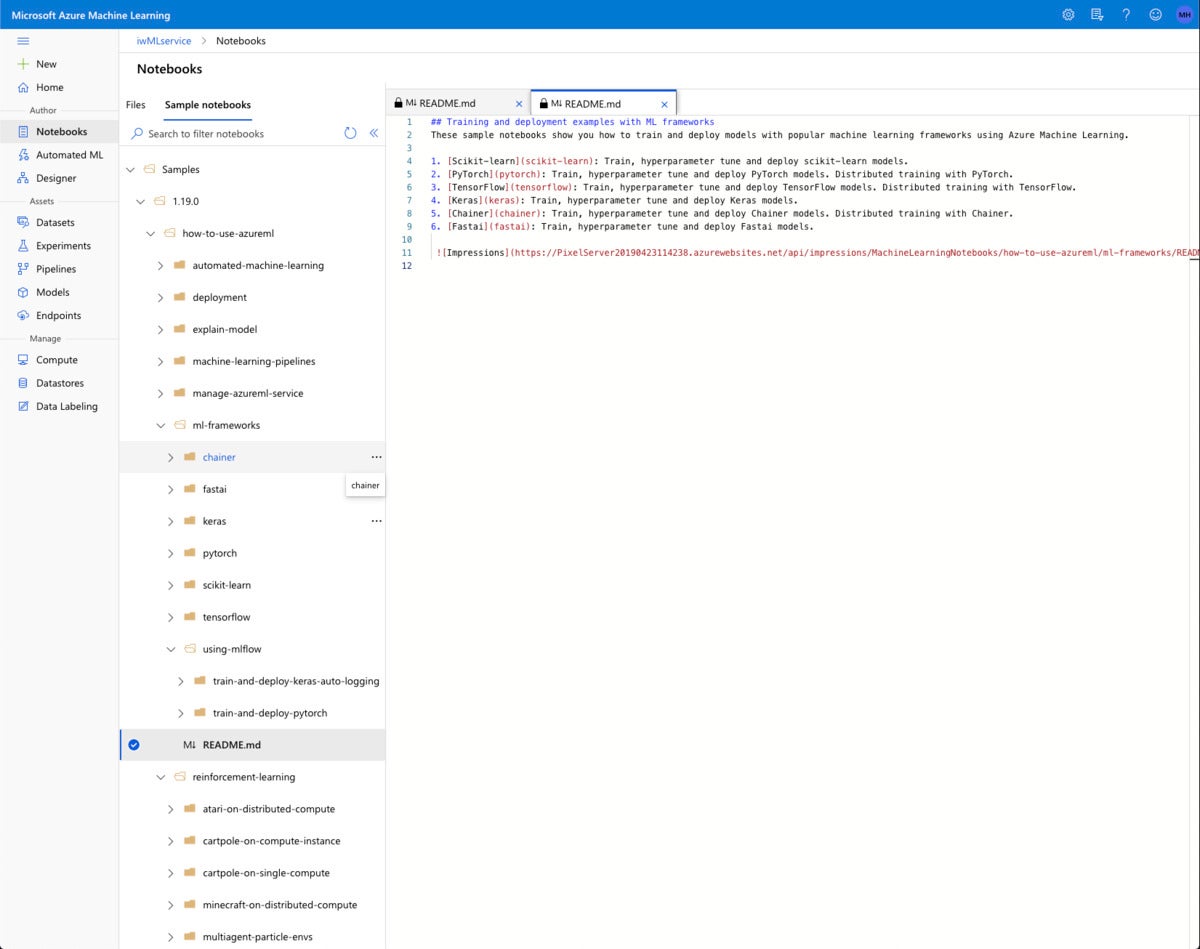

Rather than create a notebook from scratch for the purposes of this review, I started with a Microsoft sample notebook.

IDG

There are many more Azure sample notebooks now than when I looked at them in 2019. Among other improvements, they now cover more frameworks and reinforcement learning.

I chose one of the sample notebooks that demonstrate explainable machine learning. This is only one of the new capabilities of Azure Notebooks. Others include forecasting and reinforcement learning.

IDG

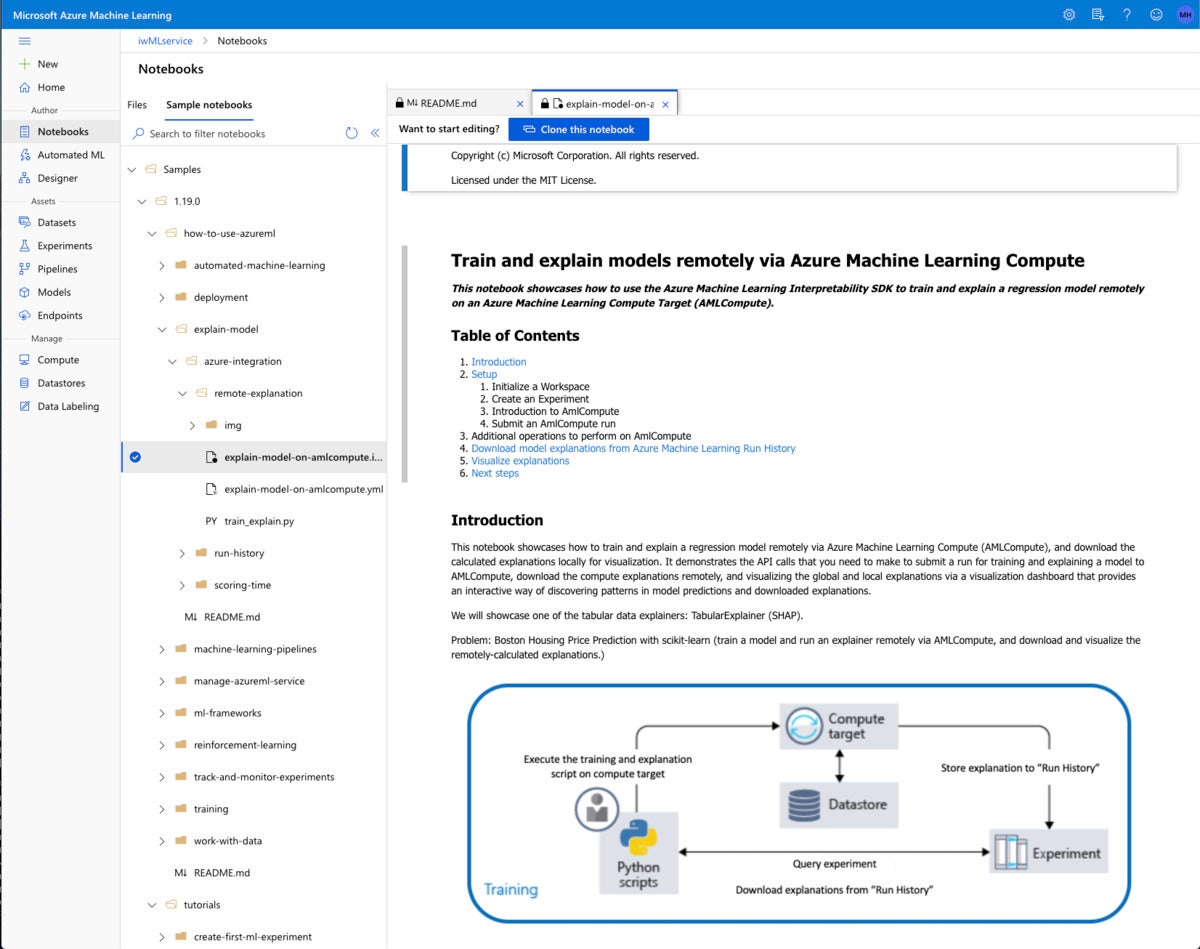

The remote-explanation sample demonstrates how to train and explain models remotely using the Azure Machine Learning Interpretability SDK.

To actually run a sample notebook, you need to clone it into your own directory and add a compute resource instance.

IDG

For the notebook itself, I picked a two-core general purpose VM with 7 GB of RAM, which costs $0.15 per hour to run.
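To put that hourly rate in perspective, here is a minimal sketch of the arithmetic. It assumes simple linear billing at the $0.15 per hour quoted above and ignores storage and other charges; the function name and the usage figures are illustrative only.

```python
from decimal import Decimal

HOURLY_RATE_USD = Decimal("0.15")  # rate quoted above for the two-core, 7 GB VM


def instance_cost(hours_running: float, rate: Decimal = HOURLY_RATE_USD) -> Decimal:
    """Return the VM charge in USD for a given number of running hours.

    Assumes simple linear billing; storage and networking are not included.
    """
    return (Decimal(str(hours_running)) * rate).quantize(Decimal("0.01"))


if __name__ == "__main__":
    # Hypothetical usage patterns, for illustration only.
    print("One 8-hour working day:", instance_cost(8))
    print("Left running for 30 days:", instance_cost(24 * 30))
```

The second figure is the reason to stop instances you are not using.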

This notebook creates an additional compute resource to run the model, a remote cluster of up to four nodes. Then it submits a script to the cluster, specifying dependencies of Pandas and Scikit-learn, and waits for the script to complete.

This run took 5 minutes 39 seconds. Much of that time was the five minutes spent waiting for the remote resource to spin up, which was annoying—but that’s the price you pay for creating compute resources on demand and releasing them when they are idle.

Finally, the notebook downloads the results from the remote cluster and lets you analyze them with the explanation dashboard. The What-if tool is comparable to Google’s, but not limited to TensorFlow models. This particular example builds a Scikit-learn model.

IDG

This is the Explanation Dashboard viewed in a separate browser tab. There are four screens in the dashboard; this is the individual feature importance and what-if screen, showing real datapoints at left and the entry form for what-if datapoints at right.
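The what-if idea is simple enough to sketch without the dashboard: take a real data point, change one feature, and compare the model's predictions. What follows is a toy illustration in plain Python, not the Azure Machine Learning Interpretability SDK; the logistic scoring function, the feature names, and the weights are all hypothetical.

```python
import math

# Hypothetical weights for a toy logistic model; not taken from the sample notebook.
WEIGHTS = {"age": 0.03, "hours_per_week": 0.05, "years_education": 0.20}
BIAS = -5.0


def predict_proba(point: dict) -> float:
    """Return the toy model's probability of the positive class."""
    score = BIAS + sum(WEIGHTS[name] * value for name, value in point.items())
    return 1.0 / (1.0 + math.exp(-score))


def what_if(point: dict, feature: str, new_value: float) -> tuple:
    """Return (original probability, probability after changing one feature)."""
    changed = dict(point)  # copy so the real data point is left untouched
    changed[feature] = new_value
    return predict_proba(point), predict_proba(changed)


if __name__ == "__main__":
    real_point = {"age": 40, "hours_per_week": 40, "years_education": 12}
    before, after = what_if(real_point, "years_education", 16)
    print(f"before={before:.3f} after={after:.3f} change={after - before:+.3f}")
```

A real dashboard does the same thing against the trained model, which is why it is not tied to any one framework.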

Azure Machine Learning notebooks currently have pre-release support for R and RStudio. I was able to create an RStudio environment from the Compute tab (see the first screenshot below) and clone the sample vignettes by following the instructions in the tutorial. I ran into trouble at the “load your workspace” stage, but fixed it by reinstalling the Azure Machine Learning SDK for R from GitHub. Unfortunately, I ran into more bugs at the create_estimator() call that I couldn’t fix, and gave up. (See the second screenshot below.) Oh, well: It was advertised as pre-release, and it was.

IDG

Individual compute instances are typically used for notebooks. When an instance is running, you can use the appropriate Application URI link to launch a JupyterLab, Jupyter, or RStudio environment. This particular instance was created without SSH privileges.

IDG

Azure offers pre-release support for the open source edition of RStudio Server. In going through this tutorial, I ran into a bug that appears to be a version mismatch.

Automated ML

Automated ML in Azure is not as new as it looks. As far as I can tell, it’s a nice user interface around the AutoMLConfig class that has been available in Azure Notebooks since at least early 2019. What is new under the covers? Forecasts, explanations, and featurization (automatic feature engineering).

I’ll note that Azure Automated ML currently only works on tabular or file data sets, just like Google AutoML Tables. DataRobot (see my review) is more flexible, and does AutoML using a mix of training data types, including images. While an Azure AutoML task of type image-instance-segmentation was added to the code in December 2020, I haven’t yet found it in the user interface or tutorials.

For fun, you can compare the Bike share AutoML forecasting tutorial with the notebook version of the same analysis. As a crusty old Python programmer, I prefer the notebook, but you may find the graphical interface easier for new AutoML projects.

IDG

This Azure notebook implements AutoML forecasting. There is a similar tutorial using the automated machine learning interface.
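Conceptually, automated ML for forecasting fits several candidate models and ranks them by a validation metric. The sketch below shows that loop in plain Python with three deliberately simple forecasters. It is not how Azure implements AutoML and does not use the Azure SDK; the candidate models, the function names, and the rental counts are made up for illustration.

```python
from statistics import mean


def naive_last(train, horizon):
    """Repeat the last observed value."""
    return [train[-1]] * horizon


def historical_mean(train, horizon):
    """Repeat the mean of the training data."""
    return [mean(train)] * horizon


def seasonal_naive(train, horizon, season=7):
    """Repeat the most recent full season (weekly by default)."""
    return [train[-season + (i % season)] for i in range(horizon)]


CANDIDATES = {
    "naive_last": naive_last,
    "historical_mean": historical_mean,
    "seasonal_naive": seasonal_naive,
}


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def rank_candidates(train, holdout):
    """Score every candidate on the holdout set; best (lowest MAE) first."""
    scores = [
        (name, mean_absolute_error(holdout, model(train, len(holdout))))
        for name, model in CANDIDATES.items()
    ]
    return sorted(scores, key=lambda item: item[1])


if __name__ == "__main__":
    # Made-up daily rental counts: a weekly pattern plus a small upward trend.
    week = [120, 130, 125, 140, 160, 220, 210]
    series = [count + 5 * w for w in range(4) for count in week]
    train, holdout = series[:-7], series[-7:]
    for name, mae in rank_candidates(train, holdout):
        print(f"{name:16s} MAE={mae:.2f}")
```

A real AutoML service searches a far larger space of algorithms, hyperparameters, and featurization steps, but the leaderboard it shows you is the same kind of ranking.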

Designer

The Azure Machine Learning Designer is a no-code/low-code graphical interface for creating pipelines. While it resembles the old Azure Machine Learning Studio interface, it uses the much more robust infrastructure now available in Azure Machine Learning, including clusters of VM-based nodes, which may have GPUs or FPGAs, as compute targets. The Designer’s graphical approach to pipeline creation is similar to KNIME, although KNIME has many more modules.

IDG

The Azure Machine Learning Designer allows you to drag and drop modules and other assets onto a canvas to create a machine learning pipeline with little or no code. This pipeline is a restaurant rating recommender provided as a sample. The Wide and Deep model, proposed by Google, jointly trains wide linear models and deep neural networks to combine the strengths of memorization and generalization.
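To make the "wide plus deep" combination concrete, here is a minimal sketch of a forward pass in plain Python: the output of a linear (wide) part and the output of a small neural network (deep) part are added before a single sigmoid. It shows only prediction, not the joint training, and it is not the Designer module's implementation; the layer sizes, parameter names, and weights are hypothetical.

```python
import math


def relu(values):
    """Rectified linear activation applied elementwise."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: one weight row per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def wide_and_deep_predict(wide_features, deep_features, params):
    """Combine a linear model and a one-hidden-layer network into one probability."""
    # Wide part: a plain linear model over sparse or crossed features.
    wide_logit = sum(w * x for w, x in zip(params["wide_w"], wide_features))
    # Deep part: a small feed-forward network over dense features.
    hidden = relu(dense(deep_features, params["hidden_w"], params["hidden_b"]))
    deep_logit = sum(w * h for w, h in zip(params["out_w"], hidden))
    # The two logits are summed, so both parts shape the same prediction.
    logit = wide_logit + deep_logit + params["bias"]
    return 1.0 / (1.0 + math.exp(-logit))


if __name__ == "__main__":
    # Hypothetical parameters, chosen only so the example runs.
    params = {
        "wide_w": [0.5, -0.25],
        "hidden_w": [[1.0, 0.0], [0.0, -1.0]],
        "hidden_b": [0.0, 0.0],
        "out_w": [1.0, 1.0],
        "bias": -0.7,
    }
    print(wide_and_deep_predict([1, 0], [0.2, 0.4], params))
```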

Assets

With Azure Machine Learning datasets, you can keep a single copy of data in your storage referenced by datasets and access data during model training without worrying about connection strings or data paths.

Your machine learning experiments and the runs within each experiment are listed in the Experiments tab.

IDG

The Experiments tab lists the Azure Machine Learning experiments you have done and the runs within each experiment. If you include child runs and have done AutoML experiments, you’ll see a lot more runs listed.

Pipelines show the Designer runs you’ve completed in the Pipeline runs tab, and diagrams you haven’t run in the Pipeline drafts tab. Azure Machine Learning pipelines can be published to get a REST endpoint that can be invoked from an external client. Clicking into a Pipeline brings up the diagram as an experiment run.
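Invoking a published pipeline from an external client comes down to an authenticated HTTP POST. The sketch below only builds the request, using Python's standard library, and does not send it. The endpoint URL, the token, and the experiment name are placeholders, and the shape of the JSON body is an assumption you should check against the Azure Machine Learning documentation.

```python
import json
import urllib.request


def build_pipeline_request(
    endpoint_url: str, bearer_token: str, experiment_name: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request for a published pipeline endpoint."""
    # Assumed body shape: the experiment under which the run should be recorded.
    body = json.dumps({"ExperimentName": experiment_name}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {bearer_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    request = build_pipeline_request(
        "https://example.invalid/pipelines/placeholder-id",  # hypothetical URL
        "<access-token>",  # placeholder; obtain a real token from Azure AD
        "restaurant-recommender",  # hypothetical experiment name
    )
    print(request.get_method(), request.full_url)
    # To actually submit the run: urllib.request.urlopen(request)
```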

IDG

Experiment runs performed on Designer pipelines display as graphs. The Explanations and Fairness tabs are new.

Completed models are listed in the Models tab whether or not they have been deployed. You can deploy models to create web service endpoints, listed in the Endpoints tab.

Manage

You can manage your compute instances, compute clusters, inference clusters, and attached compute resources in the Compute tab. That’s a good place to check for running instances that you might want to kill before they accrue long-term charges.

Any datastore you register appears in the Datastores tab. The underlying storage may be Azure Blob storage or an Azure file share.

Azure Machine Learning data labeling gives you a central place to create, manage, and monitor labeling projects. Data labeling currently supports image classification, either multi-label or multi-class, and object identification with bounding boxes.

Data labeling tracks progress and maintains the queue of incomplete labeling tasks. This is a manual process with two optional phases of machine learning assistance: clustering and pre-labeling. The latter uses transfer learning, and may be available after only a few hundred images have been manually labeled if your categories are similar to the pre-trained model.

ONNX Runtime

The Open Neural Network Exchange (ONNX) can help optimize the inference of your trained machine learning model. Models from many frameworks, including TensorFlow, PyTorch, Scikit-learn, Keras, Chainer, MXNet, Matlab, and Spark MLlib, can be exported or converted to the standard ONNX format.

The ONNX Runtime is a high-performance inference engine for deploying ONNX models to production. It’s optimized for both cloud and edge and works on Linux, Windows, and Mac. Written in C++, it also has C, Python, C#, Java, and JavaScript (Node.js) APIs for usage in a variety of environments. ONNX Runtime supports both deep neural network and traditional machine learning models and integrates with accelerators on different hardware such as TensorRT on Nvidia GPUs, OpenVINO on Intel processors, and DirectML on Windows.

Azure Databricks

Azure Databricks provides hosted, optimized Apache Spark clusters running on Azure. Spark is a big data analytics engine that includes its own machine learning libraries. Azure Databricks integrates with Azure Machine Learning, Azure Data Factory, Azure Data Lake Storage, and Azure Synapse Analytics.

Azure Cognitive Search

Azure Cognitive Search is a cloud search service with built-in AI capabilities that enrich all types of information, to identify and explore relevant content at scale. Formerly known as Azure Search, it uses the same integrated Microsoft natural language stack that Bing and Office have used for more than a decade, and AI services across vision, language, and speech. In other words, Microsoft Search uses Cognitive Search technology to offer software as a service for enterprise search within Microsoft products.

Azure Bot Service

Using bots is one way to automate or partially automate customer service and help desks. The Azure Bot Service and the Microsoft Bot Framework SDK help you to create bots and integrate them with Azure Cognitive Services. Once you have created your bots, you can deploy them to channels including your website or apps, Microsoft Teams, Skype, Slack, Cortana, and Facebook Messenger.

Bots can be as sophisticated as you want to make them. You can start simple with informational bots, essentially front ends to your FAQ documents. Then you can add capabilities, for example by using the Azure Text Analytics and Language Understanding services to determine customer intent so that the bot can do customer escalations and help desk triage.

AI and machine learning on Azure

Overall, the Azure AI and Machine Learning platform—including the Azure Cognitive Services, Azure Machine Learning, the ONNX Runtime, Azure Databricks, Azure Cognitive Search, and Azure Bot Service—offers a wide range of cognitive services and a solid platform for machine learning that supports automatic machine learning, no-code/low-code machine learning, and Python-based notebooks. All of the services are very solid, and almost all services have a free tier for development and test. The only bug I found was in an experimental RStudio notebook, and that appeared to be a simple library version mismatch. (Yes, I reported the bug.)

I was impressed by the ability of Azure custom cognitive services to do transfer learning with single-digit numbers of samples, for example Custom Vision and Form Recognizer. I was also impressed by the progress the Azure Responsible AI offerings have made, although I suspect that there’s more work to be done in that area.

How does Azure AI compare to Google Cloud AI and Amazon’s AI and machine learning offerings? The quality and scope are similar; you can’t really go wrong with any of them. While I haven’t yet absorbed all of the improvements that AWS announced at re:Invent in December 2020, I can say that one differentiator between Azure and Google cognitive services is that Azure allows you to run cognitive service containers on premises, while Google currently lacks on-prem options.

—

Pricing

[All dollar values in USD unless otherwise stated]

Note: In general, production services have a 99.9% SLA.

Anomaly detection: 20K free transactions/month (10 calls/second dev/test); $0.314/1K transactions/month (80 calls/second production).

Content Moderator: 5K free transactions/month (1 transaction/second dev/test); $1/1K transactions/month (10 transactions/second production) + volume discounts over 1M transactions/month.

Metrics Advisor: No charge during preview.

Personalizer: 50K free transactions/month (10 GB storage); $1/1K transactions/month + volume discounts over 1M transactions/month.

Immersive Reader: No charge during preview.

Language Understanding: 10K free transactions/month (5 TPS dev/test); $1.50/1K transactions/month text, $5.50/1K transactions/month speech (50 TPS production).

QnA Maker: 3 managed documents free each month (dev/test); $10 for unlimited managed documents. QnA also requires an App Service (free to $0.285/hour) and a Cognitive Search service (free to $7.667/hour).

Text Analytics: 5K free transactions/month; standard $1/1K text records with volume discounts; tiers from $74.71/month for 25K transactions to $5K/month for 10M transactions.

Translator: 2M chars free/month; $10 per million chars of standard translation, $40 per million chars of custom translation; standard translation tiers from $2K/month for 250M chars to $45K/month for 10B chars, custom translation tiers from $2K/month for 62.5M chars to $45K/month for 2.5B chars.

Speech to Text: 5 audio hours free per month, 1 concurrent request; $1 per audio hour, 20 concurrent requests.

Text to Speech: 5 million characters free per month standard, 0.5 million characters free per month neural; $4 per 1M characters standard, $16 per 1M characters neural, $100 per 1M characters neural long audio.

Speech Translation: 5 audio hours free per month; $2.50 per audio hour.

Computer Vision: 5K transactions free per month (20/minute); $1 to $1.50 per 1K transactions (10 TPS) with volume discounts.

Custom Vision: 1 hour training, 5K training images, and 10K predictions free/month (2 TPS); $20/compute hour training, $2 per 1K transactions, $0.70 per 1K images storage (10 TPS).

Face: 30K free transactions/month (20 TPM); $1 per 1K transactions with volume discounts (10 TPS).

Form Recognizer: 500 pages free per month; $10 to $50 per 1K pages depending on form type.

Video Indexer: 10 hours of free indexing to website users and 40 hours of free indexing to API users; $0.15/minute for video analysis, $0.04/minute for audio analysis.

Azure Machine Learning: $0.016 to $26.688/hour depending on number of CPUs, GPUs, and FPGAs, and amount of RAM; storage costs additional. VM costs only; no extra charge for machine learning service.

Azure Databricks: Combination of VM and service costs. See pricing details.

Azure Cognitive Search: Free to $3.839/hour, plus $1/1K images with volume discounts for document image extraction.

Azure Bot Service: Standard channels free; Premium channels free for 10K messages/month, S1 $0.50/1K messages.
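As a rough illustration of how the per-transaction prices above translate into a monthly bill, here is a minimal sketch for three of the services. The rates and free quotas are copied from the list; the billing logic is an assumption (usage within the free quota costs nothing, and above it every transaction is billed at the standard rate, with volume discounts ignored), so treat the output as an estimate rather than a quote.

```python
from decimal import Decimal

# (free transactions per month, USD per 1K transactions), copied from the list above.
PRICES = {
    "Anomaly detection": (20_000, Decimal("0.314")),
    "Content Moderator": (5_000, Decimal("1")),
    "Personalizer": (50_000, Decimal("1")),
}


def estimate_monthly_cost(service: str, transactions: int) -> Decimal:
    """Estimate the monthly charge in USD for one service."""
    free_quota, rate_per_1k = PRICES[service]
    if transactions <= free_quota:
        return Decimal("0.00")
    # Assumption: once on the paid tier, every transaction is billed,
    # and volume discounts are not applied.
    cost = Decimal(transactions) / Decimal(1000) * rate_per_1k
    return cost.quantize(Decimal("0.01"))


if __name__ == "__main__":
    # Hypothetical monthly volumes, for illustration only.
    for service, volume in [("Anomaly detection", 15_000), ("Anomaly detection", 100_000)]:
        print(f"{service}: {volume} transactions -> ${estimate_monthly_cost(service, volume)}")
```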

Platform

Azure Cloud.